-

Discover kdb+ 4.1’s New Features

20 February, 2024

-

Democratizing Financial Services: Tier 1 Tooling Without the Expense

5 February, 2024

-

Transforming Enterprise AI with KDB.AI on LangChain

29 January, 2024

-

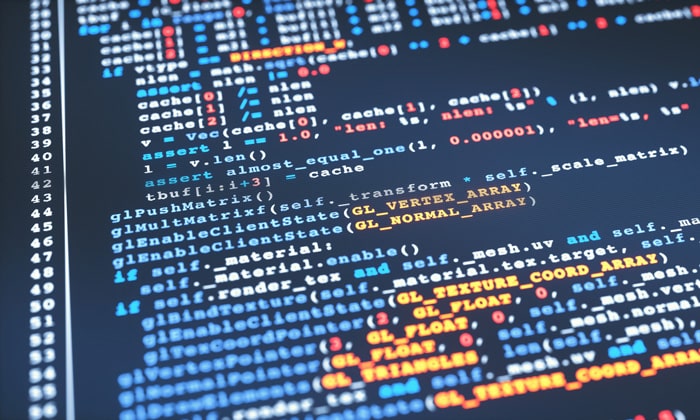

How to Build and Manage Databases Using PyKX

24 January, 2024

-

Real-Time Data, SaaS, and the Rise of Python – a Prediction Retrospective

19 December, 2023

-

Elevate Your Data Strategy With KDB.AI Server Edition

29 November, 2023

-

Implementing RAG with KDB.AI and LangChain

15 November, 2023

-

Cultivate Sustainable Computing With the Right ‘per Watt’ Metrics

6 November, 2023

-

PyKX Highlights 2023

25 October, 2023

-

3 Steps To Make Your Generative AI Transformative Use Cases Sticky

25 October, 2023

-

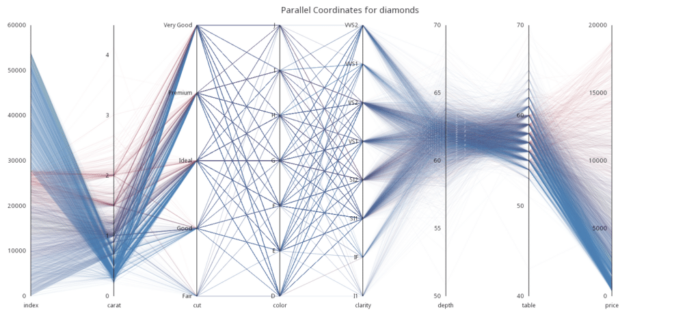

The Power of Parallelism within kdb

12 October, 2023

-

KX Releases the kdb Visual Studio Code Extension

5 October, 2023

GPU-accelerated deep learning: Architecting agentic systems

Discover how GPU-accelerated deep learning transforms financial research and analysis by handling petabyte-scale data with precision and speed.