by Steve Wilcockson

Two Stars Collide: Thursday at KX CON [23]

My favorite line that drew audible gasps at the opening day at the packed KX CON [23]

“I don’t work in q, but beautiful beautiful Python” said Erin Stanton of Virtu Financial simply and eloquently. As the q devotees in the audience chuckled, she qualified her statement further “I’m a data scientist. I love Python.”

The q devotees had their moments later however when Pierre Kovalev of the KX Core Team Developer didn’t show Powerpoint, but 14 rounds of q, interactively swapping characters in his code on the fly to demonstrate key language concepts. The audience lapped up the q show, it was brilliant.

Before I return to how Python and kdb/q stars collide, I’ll note the many announcements during the day, which are covered elsewhere and to which I may return in a later blog. They include:

Also, Kevin Webster of Columbia University and Imperial College highlighted the critical role of kdb in price impact work. He referenced many of my favorite price impact academics, many hailing from the great Capital Fund Management (CFM).

Yet the compelling theme throughout Thursday at KX CON [23] was the remarkable blend of the dedicated, hyper-efficient kdb/q and data science creativity offered up by Python.

Erin’s Story

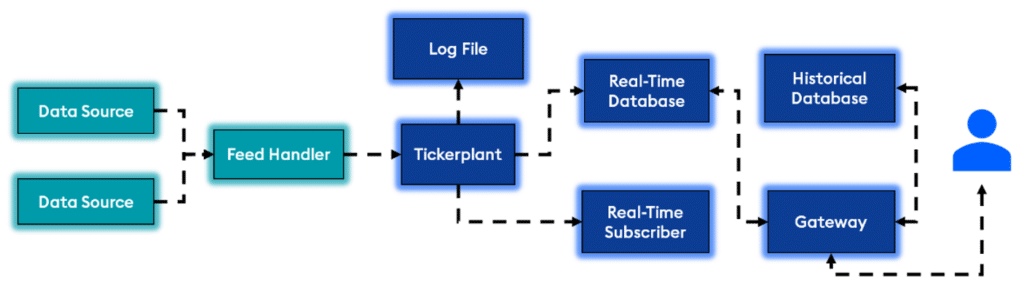

For me, Erin Stanton’s story was absolutely compelling. Her team at broker Virtu Financial had converted a few years back what seemed to be largely static, formulaic SQL applications into meaningful research applications. The new generation of apps was built with Python, kdb behind the scenes serving up clean, consistent data efficiently and quickly.

“For me as a data scientist, a Python app was like Xmas morning. But the secret sauce was kdb underneath. I want clean data for my Python, and I did not have that problem any more. One example, I had a SQL report that took 8 hours. It takes 5 minutes in Python and kdb.”

The Virtu story shows Python/kdb interoperability. Python allows them to express analytics, most notably machine learning models (random forests had more mentions in 30 minutes than I’ve heard in a year working at KX, which was an utter delight! I’ve missed them). Her team could apply their models to data sets amounting to 75k orders a day, in one case 6 million orders over a 4 months data period, an unusual time horizon but one which covered differing market volatilities for training and key feature extraction. They could specify different, shorter time horizons, apply different decision metrics. ”I never have problems pulling the data.” The result: feature engineering for machine learning models that drives better prediction and greater client value. With this, Virtu Financial have been able to “provide machine learning as a service to the buyside… We give them a feature engineering model set relevant to their situation!,” driven by Python, data served up by kdb.

The Highest Frequency Hedge Fund Story

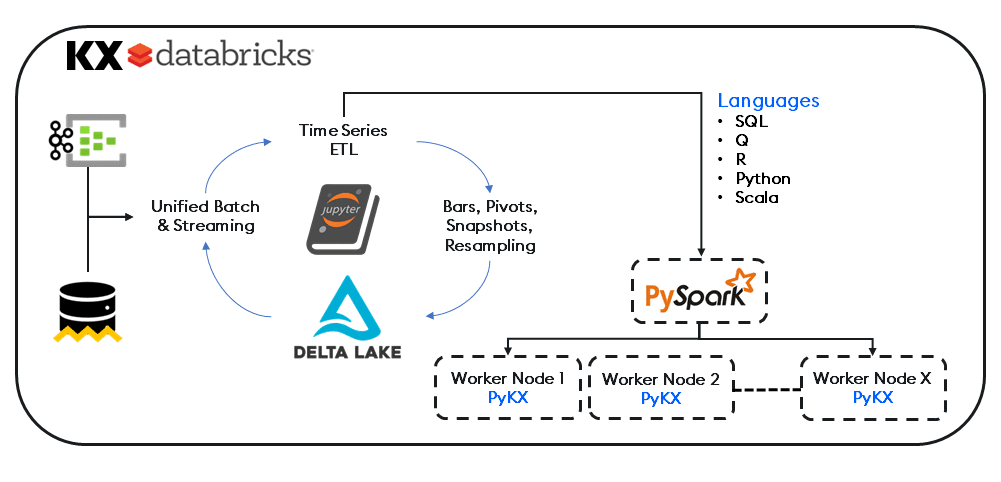

I won’t name the second speaker, but let’s just say they’re leaders on the high-tech algorithmic buy-side. They want Python to exhibit q-level performance. That way, their technical teams can use Python-grade utilities that can deliver real-time event processing and a wealth of analytics. For them, 80 to 100 nodes could process a breathtaking trillion+ events per day, serviced by a sizeable set of Python-led computational engines.

Overcoming the perceived hurdle of expressive yet challenging q at the hedge fund, PyKX bridges Python to the power of kdb/q. Their traders, quant researchers and software engineers could embed kdb+ capabilities to deliver very acceptable performance for the majority of their (interconnected, graph-node implemented) Python-led use cases. With no need for C++ plug-ins, Python controls the program flow. Behind-the-scenes, the process of conversion between NumPy, pandas, arrow and kdb objects is abstracted away.

This is a really powerful use case from a leader in its field, showing how kdb can be embedded directly into Python applications for real-time, ultra-fast analytics and processing.

Alex’s Story

Alex Donohoe of TD Securities took another angle for his exploration of Python & kdb. For one thing, he worked with over-the-counter products (FX and fixed income primarily) which meant “very dirty data compared to equities.” However, the primary impact was to explore how Python and kdb could drive successful collaboration across his teams, from data scientists and engineers to domain experts, sales teams and IT teams.

Alex’s personal story was fascinating. As a physics graduate, he’d reluctantly picked up kdb in a former life, “can’t I just take this data and stick it somewhere else, e.g., MATLAB?”

He stuck with kdb.

“I grew to love it, the cleanliness of the [q] language,” “very elegant for joins” On joining TD, he was forced to go without and worked with Pandas, but he built his ecosystem in such a way that he could integrate with kdb at a later date, which he and his team indeed did. His journey therefore had gone from “not really liking kdb very much at all to really enjoying it, to missing it”, appreciating its ability to handle difficult maths efficiently, for example “you do need a lot of compute to look at flow toxicity.” He learnt that Python could offer interesting signals out of the box including non high-frequency signals, was great for plumbing, yet kdb remained unsurpassed for its number crunching.

Having finally introduced kdb to TD, he’s careful to promote it well and wisely. “I want more kdb so I choose to reduce the barriers to entry.” His teams mostly start with Python, but they move into kdb as the problems hit the kdb sweet spot.

On his kdb and Python journey, he noted some interesting, perhaps surprising, findings. “Python data explorers are not good. I can’t see timestamps. I have to copy & paste to Excel, painfully. Frictions add up quickly.” He felt “kdb data inspection was much better.” From a Java perspective too, he looks forward to mimicking the developmental capabilities of Java when able to use kdb in VS Code.”

Overall, he loved that data engineers, quants and electronic traders could leverage Python, but draw on his kdb developers to further support them. Downstream risk, compliance and sales teams could also more easily derive meaningful insights more quickly, particularly important as they became more data aware wanting to serve themselves.

Thursday at KX CON [23]

The first day of KX CON [23] was brilliant. a great swathe of great announcements, and superb presentations. For me, the highlight was the different stories of how when Python and kdb stars align, magic happens, while the q devotees saw some brilliant q code.