Introduction

Databases lie at the heart of kdb+. The ability to quickly process large amounts of on-disk data separates kdb+ from its competitors. Database design evolves over time and any q developer will inevitably have to make schema changes to historical databases. The dbmaint utility makes this easy.

In this blog we will expand upon the concepts discussed in a previous blog, Kdb+/q Insights: Scripting with q, and explore some concepts on how we might set up a framework for running maintenance against tables stored in a partitioned database. [1]

Use Case

Our objective is to build a non-interactive script to run some maintenance tasks against a database, adding new columns to a table, renaming columns and changing column types.

The following section assumes presence of an HDB with some trade data based on ‘setuphdb.q’ located on GitHub. This script can be used to mock up a database with some trade data for usage in this scenario; based on a Q For Mortals example by Jeffry Borror.

q setuphdb.q -db hdbWith our trade data on disk, we can begin running some maintenance tasks, for example we may want to add a column showing the ticker ‘industry’, rename ‘px’ to ‘price’ for readability and demonstrate the conversion of a column from one type to another.

2#select from trades where date=2015.01.10

date tm sym qty price val

--------------------------------------------------------------------

2015.01.01 00:00:00.395 ibm 1050 213 223650

2015.01.01 00:00:01.059 goog 1430 643.68 920462.4Preparing wrapper script

We will start by creating a simple shell wrapper script that will ensure our maintenance process is limited to running on a single core; this would be useful in a production environment to prevent our task from impacting other processes on our server.

This script will run a non-interactive q shell for us, passing in command line args/options (using ‘$*’), including our database and q scripts.

#!/bin/sh

taskset -c 0 q ./dbmaint.q $* -c 2000 2000 <<< 'l hdbmaint.q'

exit 0Note, we could run tasket directly with q on the command line, however storing the command in a script is useful for reusability (such as scheduling as you’ll see later on).

See Github for full script.

Preparing maintenance script

To begin we will define some logging functions as seen in the previous blog to help output standardized messages during our script execution:

/// Logging utilities

d .log

print:{(-1)(" " sv string (.z.D;.z.T)),x;};

out:{[x]print[": INFO : ",x]};

err:{[x]print[": ERROR : ",x]};

errexit:{err x;err"Exiting";exit 1};

sucexit:{out "Maintenance complete"; out "Success. Exiting";exit 0};

usage:{[x] errexit "Missing param(s) Usage: hdbmaint.q "," " sv "-",'string distinct `db`action,x };

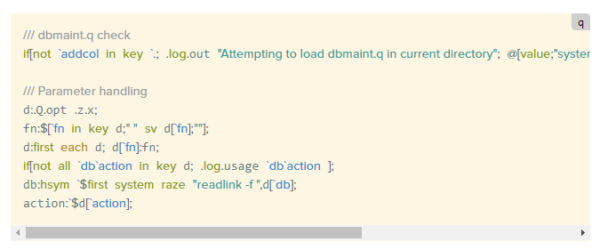

d .We will add a check to load dbmaint.q, should it not be passed in by our bash wrapper [2]. and the capture and check that a ‘db’ directory has been passed to our script, along with an ‘action’.

We will also add special handling for functions noted as ‘fn’ passed into our scripts which are passed to our script as strings to be evaluated.

/// dbmaint.q check

if[not `addcol in key `.; .log.out "Attempting to load dbmaint.q in current directory"; @[system;"l ./dbmaint.q"; {.log.errexit "Could not load dbmaint.q",x}]];

/// Parameter handling

d:.Q.opt .z.x;

if[not all `db`action in key d; .log.usage `db`action];

d:(first each d),$[`fn in key d;enlist[`fn]!enlist " " sv d[`fn];()];

d:{x[y]:`$x[y];x}[d;key[d] except `db`fn];

d[`db]:hsym `$first system raze "readlink -f ",d[`db];