by Steve Wilcockson

I’ve spent much of my working life trying to avoid wearing suits, management consultants and Gartner. When asked to attend the Gartner Data & Analytics Summit in London (May 22-24, 2023), I sucked my teeth and didn’t buy a suit. Happily, at the Summit sneakers were plentiful, though so too were suits, and I *really* enjoyed this event no matter how much I wanted not to. Here are my ten key takeaways, with some concluding observations.

Takeaway 1: “You are already thinking about compute…. You’re going to be thinking about it a whole lot more”

Professor Chris Miller discussed how Moore’s Law is now broken, and compute innovation enabled through semiconductor technology is being politicized by governments across the world amidst geopolitical change. The long and the short of it: compute is getting scarcer, and increasingly subject to government protectionism.

The KX View: With KX, not a byte is wasted, whether in memory or in code. For time-series and vector queries, it is 100x more efficient than most platforms, and GPU-free. If you’re not appreciating kdb’s considerable efficiency for data analytics and storage, you will soon.

Takeaway 2: “Publish Your 10 Worst Price to Performance Queries and Your Ten Best, by app, workload, tooling”

A Gartner FinOps specialist highlighted the growing awareness of FinOps amidst escalating and unpredictable cloud costs. He noted that teams had hitherto found it easy to, say, document throughput, latency or performance by SLA, but cost-per-query principles and analyses are not yet mainstream.
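To make the cost-per-query idea concrete, here is a minimal Python sketch of how a team might rank its worst and best price-to-performance queries by app, workload and tooling. Everything in it is an assumption for illustration: the `QueryRun` fields, the choice of dollars per million rows as the price-to-performance measure, and the sample figures are all made up, not taken from the session.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryRun:
    app: str            # owning application (hypothetical label)
    workload: str       # e.g. "dashboard", "backtest"
    tool: str           # engine or tool that ran the query
    runtime_s: float    # wall-clock runtime in seconds
    hourly_rate: float  # assumed compute price in dollars per hour
    rows: int           # rows processed (must be > 0), a proxy for useful work

    @property
    def cost(self) -> float:
        """Dollar cost of this run under the assumed hourly rate."""
        return self.runtime_s * self.hourly_rate / 3600.0

    @property
    def cost_per_million_rows(self) -> float:
        """Price-to-performance: dollars per million rows processed."""
        return self.cost / (self.rows / 1_000_000)


def rank(runs, n=10):
    """Return (worst, best) lists of up to n runs by cost per million rows."""
    ordered = sorted(runs, key=lambda r: r.cost_per_million_rows, reverse=True)
    worst = ordered[:n]          # most expensive per unit of work first
    best = ordered[::-1][:n]     # cheapest per unit of work first
    return worst, best


# Made-up sample data, for illustration only.
RUNS = [
    QueryRun("risk", "backtest", "warehouse", 1800.0, 16.0, 40_000_000),
    QueryRun("sales", "dashboard", "warehouse", 36.0, 4.0, 2_000_000),
    QueryRun("ops", "alerting", "stream-engine", 9.0, 2.0, 5_000_000),
]

worst, best = rank(RUNS, n=2)
for r in worst:
    print(f"worst: {r.app}/{r.workload}/{r.tool} "
          f"${r.cost_per_million_rows:.4f} per 1M rows")
for r in best:
    print(f"best:  {r.app}/{r.workload}/{r.tool} "
          f"${r.cost_per_million_rows:.4f} per 1M rows")
```

Rows processed is only one possible denominator; a team could just as reasonably normalize by bytes scanned or by business outcome, as long as the same measure is used across apps.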

The KX View: Run the right workloads in the right places. For example, take kdb time-series, machine learning and vector search queries to the Snowflake data cloud to deliver efficiency, cost savings and, yes, in many cases real waste reduction and a smaller energy-related carbon footprint.

Takeaway 3: “Build for the Decentralized Autonomous Organization. It will be with us by 2027”

Daryl Plummer, Distinguished VP Analyst at Gartner, highlighted decentralized autonomous organizations. He suggested boardrooms and hierarchies will adapt, as more stakeholders across an organization can and will drive decision-making, their experience enhanced via augmented reality and digital boardrooms. That requires comprehensive distributed insights, including those contributed by AI assistants, for informed, democratic, decentralized decision-making.

The KX View: With KX, observe all data, via telescope and microscope, and, where required, make digital decisions. Quoting one AI-centric KX customer, we see “no limits” to “all conceivable data in the universe” that they could “capture, in real-time”, “to record a ‘continuous stream of truth’,” and, ChatGPT-like, “ask any question of any dataset at any time, and get the answer instantaneously.”

Takeaway 4: “Study ‘prompt engineering’ to optimise code generation and usable AI assets”

The next discipline for your LinkedIn profile, resume or CV, so it was claimed, is “prompt engineering”. Already augmenting a whole host of activities from code to art to marketing collateral, prompt engineering (is it a science or an art?) is everyone’s new favorite upskill. This partly involves asking the right questions, and critically reviewing them, to get useful, hallucination-free answers. Oh yes, and the education system will need to change to support this mega-trend (I completely agree!).

The KX View: At last week’s KXCon [23], several speakers demonstrated their use of ChatGPT to inform their presentations and code that lay behind their presentations. KX users and developers appear to be prompt engineers by default.

Takeaway 5: “When you meet Data Scientists with (Python) Programming Skills, Hire Them”

Another passionate plea, another articulated by Daryl Plummer. This made me laugh. I and my ilk have advocated this for years. Now that Gartner officially sanctions Python, I feel well and truly part of the establishment and thus feel a little bit sick inside, like I’ve sold out to Mammon. The statement is right, because Pythonic data scientists make the world go around. It is also 10 years too late, but hey, what others thought yesterday, Gartner predicts tomorrow.

The KX View: With Python and PyKX, take your Python research to production with ease. Accelerate research, deploy more data, even real-time data, and drive collaboration across teams.

Takeaway 6: “When right to do so, start with real-time, not batch. It’s too expensive the other way around”

This recommendation came from BWT Alpine’s Head of Data Science & Data Engineering (note the job title, it matters), Sergio Rodrigues. He presented the KX-sponsored session on how “typical concepts of data management just aren’t good enough for the real world, including Formula 1.” He noted that traditional data management was not geared to time-sensitive use cases like theirs, a system of thousands of sensors that happens to make up a fast car. Then there is data coming off their simulator, wind tunnel and 10 test benches. He suggested, during a vibrant Q&A, that designing the real-time architecture first, live or simulated, made their historical and batch analysis much easier to implement. He highlighted success in exploring tyre performance on and off the race track, helping select the optimal pit stop, prevent accidents, and protect the car.

The KX View: Traditional data management does not do real-time well. It also misses the complexity and nuance of analytics, data science and machine learning, like the tyre monitoring. This is why timehouses matter. Real-time and historical are connected through time, so why shouldn’t your data management and analytics be the same?

The Audience View: “Did you know that Alpine F1 cars generate 1,000 datapoints from their sensors per second?! And billions of datapoints per race? Extraordinary challenge to have that volume shown in real-time, and they are doing that with KX”

Takeaway 7: “AI brings state of change to data, and engages with sparsity”

Sergio’s session (takeaway 6) highlighted the difficulties of reconciling traditional data management with time series and data science. This pain was reflected in a later Gartner session bringing together data engineering and AI engineering. The speaker noted that AI comes from a fundamentally mathematical standpoint that looks at change and transformation, which might be predicated on non-existent or sparse data, with data points sometimes simulated. The warehouse “gold standard” data set holds value, but AI requires so much more. Your data systems must accommodate change where it occurs, and monitor and categorize it as such.

The KX View: Worldly-wise practitioners know data is incomplete and transformational, and a pure data warehouse, while holding value, doesn’t cut the mustard on its own. The flexible, decentralized, adaptive world of AI has to co-exist with, and likely drive, your data management and warehouse strategy.

Takeaway 8: “Offline Feature Stores and Online Feature Stores are Different Architectures.” Except they need not be

I attended a brilliant jargon-busting session explaining feature stores and feature engineering for CDAO leaders. It was delivered by an analyst with good feature engineering experience, a neuroscientist by background. The analyst outlined how the feature store sits between the training process and production, including real-time, but also noted a distinction between online (for real-time) and offline (for historical analysis) feature stores. She was right. The analyst didn’t name names, but a common architecture (I’ve worked with some) might include Redis as an online feature store, and something SQL/cloud storage centric like Amazon SageMaker offline. However, that feels like a false dichotomy, not least because KX can service both types of “feature store” in a single architecture.

The KX View: kdb-centered feature stores and/or dynamic kdb feature engineering can service both real-time and historical feature store use cases. Recall the timehouse philosophy: both real-time AND historical information organized over time and easily queried in-memory. That means online feature stores can benefit from historical context, and also be queried for offline use. Just today, I saw an instance of a dynamic iterative feature engineering workflow, from feature extraction to live model inference, with direct calls back to the training process (run at speed) in order to recompute key features. Agility in action, no static feature store in sight!
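As a rough illustration of why the online/offline split need not be architectural, the toy Python sketch below keeps one time-ordered log per feature and answers both kinds of read from it: "latest value" for serving and "value as of time t" for building training sets without look-ahead. It is plain standard-library Python, not kdb or any KX API; the class name, method names and the tyre-temperature data are all hypothetical.

```python
import bisect
from collections import defaultdict


class TimeOrderedFeatureLog:
    """Toy feature log: one append-only, time-sorted history per (entity, feature)."""

    def __init__(self):
        self._times = defaultdict(list)   # (entity, feature) -> sorted timestamps
        self._values = defaultdict(list)  # (entity, feature) -> values, same order

    def append(self, entity, feature, ts, value):
        """Record a value; timestamps must arrive in non-decreasing order."""
        key = (entity, feature)
        if self._times[key] and ts < self._times[key][-1]:
            raise ValueError("out-of-order timestamp")
        self._times[key].append(ts)
        self._values[key].append(value)

    def latest(self, entity, feature):
        """'Online' read: most recent value, or None if nothing is recorded."""
        values = self._values.get((entity, feature))
        return values[-1] if values else None

    def as_of(self, entity, feature, ts):
        """'Offline' read: last value recorded at or before ts, or None."""
        key = (entity, feature)
        idx = bisect.bisect_right(self._times.get(key, []), ts)
        return self._values[key][idx - 1] if idx else None


# Hypothetical sensor readings: (timestamp in seconds, tyre temperature in C).
log = TimeOrderedFeatureLog()
log.append("car_14", "tyre_temp_c", 10, 92.5)
log.append("car_14", "tyre_temp_c", 20, 97.0)
log.append("car_14", "tyre_temp_c", 30, 101.5)

print(log.latest("car_14", "tyre_temp_c"))     # serving path: newest value
print(log.as_of("car_14", "tyre_temp_c", 25))  # training path: value known at t=25
```

The design point is simply that both reads share one store ordered by time, so the serving path and the training path cannot drift apart; a production system would add persistence, concurrency and late-arriving data handling, none of which is attempted here.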

Takeaway 9: “Go headless”

In a session on BI platforms, the relevant analyst explored how Google Looker disrupted the BI market by offering up its engine to (seemingly) rival platforms, for example Tableau. In this way, they opened up the strengths of their environment to consumers of other environments, allowing the right tool to be used within a customer-preferred architecture. It’s a great model.

The KX View: “Go headless” extends beyond BI. The KX Data Timehouse and vector processing engine provide 100x production compute and store capabilities to many ecosystems. Beyond powering Tableau and Power BI with massively data-informed analytics, KX via PyKX can serve bigger data and/or ultra-powerful analytics to Jupyter notebooks, Python or PySpark, and/or run optimized time-series/compute engines inside warehouses like Snowflake, and so much more.

Takeaway 10: “Many CDAOs force fit temporal data into a Conventional Data Store. Stop doing that”

Daryl Plummer in his keynote noted that databases like DB2 had temporal columns decades ago. However, as companies increase their utilization of temporal data, temporal columns are simply not enough. He advised building from the ground up to handle temporal data at speed, and applying that effectively and consistently across all of your projects to unlock real economic potential, calling out Syneos Health (and their use of KX) for its massively performant AI-powered billion patient simulator.

The KX View: Think of a data timehouse as a temporal data warehouse, with ultra-efficient analytics and unmitigated freedom to deploy anywhere, from real-time at the edge to warehouse. It offers so much more than a temporal column in a database, and with considerably greater (100x) efficiency.

Concluding Observations: Embrace Devs & Data Science, and Infuse Humanity for Better Outcomes

First, my natural “conference home” is among devs, data scientists, quants and MLOps practitioners, not Gartner, yet I hope the goodness I saw at the Gartner Summit 2023 continues to bridge the many gaps between leaders and innovators. I still saw evidence of divides. One passionate lunchtime exhibitor pitched “mathematical optimization”, tech not labelled “generative AI”, “MLOps” or “Data Science”, yet fundamental to all three plus a plethora of decision science use cases, e.g., manufacturing, trading, portfolio management, operations and scheduling, including telco services (as highlighted by their guest speaker from O2). I saw blank faces across the audience, not understanding how the vendor’s unsexy optimization, linear programming and prescriptive analytics make their world tick. The tech is hard, not like a simple data warehouse. Sergio from BWT Alpine bridges that gap. As a Head of Data Science and Data Engineering, he looks beyond the store to the maths, the open source innovation and the need to drive results, so far beyond the warehouse and store. His role depends on time: real-time, simulated time (think wind tunnels) and historical time (playback of prior races), and he’s not alone. Syneos, as referenced by Daryl Plummer, and many other organizations at the summit do appreciate the power of the timehouse.

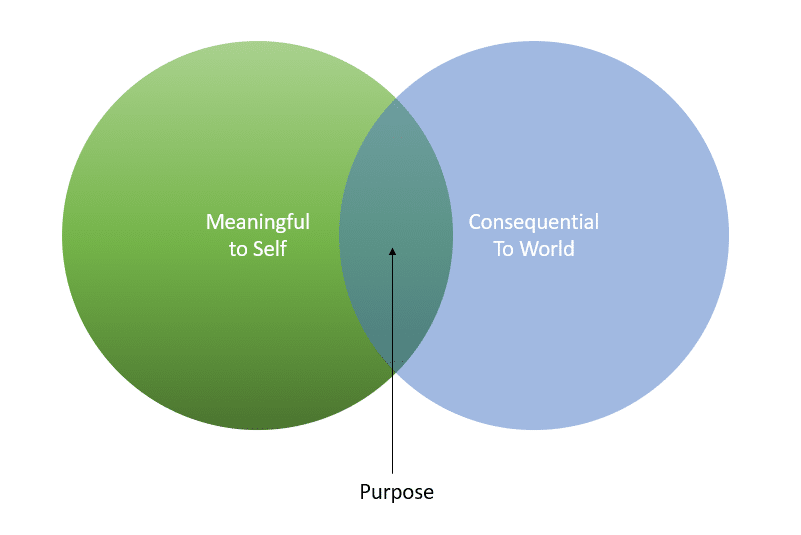

Second, the conference did not forget humanity; indeed, it put humanity at the centre of automation, AI and data. One Gartner keynote advocated the importance of the semi-spiritual “head, heart and hara”. Elsewhere, an invited futurologist advocated finding (your) purpose as the intersection of meaning to self and being consequential for the world, something we should all strive for. Absolutely right.

Finding Purpose, William Damon (discussed by Angela Oguntala, Greyspace)