Your Report Awaits

Please use this link at any time to access the report “Benchmark Report – High Frequency Data Benchmarking”.

Demo the world’s fastest database for vector, time series and real-time analytics

For over a decade, integrating Python and q has been crucial to the infrastructures of many of our largest clients. Seamlessly combining these technologies provides […]

Discover the secrets of the high-stakes world of capital markets, where trillions of events are processed daily and there’s no room for delay, in our latest eBook.

Discover the future of data management in Wall Street with our new eBook and dive into the evolution of streaming analytics, time-series data management, and generative AI.

Join Conor McCarthy (VP of Data Science) and Ryan Siegler (Developer Advocate) for a first look at PyKX 3.0

Our new whitepaper identifies the escalation of AI demands and the new challenges they bring to boards, technology providers, and consumers.

KX, a global leader in vector-based and time-series data management, has added thousands of members to the KX Community, underscoring the growing need for developer-friendly education and tools that address high-frequency and large-volume data challenges across structured and unstructured data. Spearheaded by KX’s developer advocacy program, the community has grown year over year since its inception in January 2022. This growth reflects KX’s commitment to supporting both its long-standing Capital Markets customers and the wider developer community, empowering developers to solve complex, real-world problems in building AI and analytics applications. To complement this initiative, KX has launched KXperts, a developer advocacy program that enables members to deepen their involvement and engage in thought leadership, collaboration and innovation.

“The developer experience is a core tenet of KX’s culture. We seek opportunities to deepen our connection with the developer community so we can provide a product and environment that delivers ongoing value,” said Neil Kanungo, VP of Product-Led Growth at KX. “The growth of our member base and the increasing engagement of KXperts illustrate that the developers within the builder community are eager to lead, contribute and mentor. We’re proud to provide an open, collaborative environment where developers can grow and work together to solve business challenges more effectively.”

The KX Community serves as a platform for a wide range of technical professionals, including data engineers, quants and data scientists, to engage in impactful projects and dialogue that support the growth of members, both personally and professionally, as well as influence the broader market. Community members have increasingly leveraged the uniquely elegant q programming language and, most recently, PyKX, which brings together Python’s data science ecosystem and the power of KX’s time-series analytics and data processing speed. Over 5,000 developers download PyKX daily. The Visual Studio Code extension, which enables programmers to develop and maintain kdb data analytics applications in the popular VS Code IDE, brings a familiar development flow to users, allowing them to adopt KX technology quickly and seamlessly. Combined, these tools improve the accessibility of KX products, making it easier for members to solve complex, real-time data challenges in a multitude of industries, including Capital Markets, Aerospace and Defense, Hi-Tech Manufacturing, and Healthcare.

The newly launched KXperts program is a unique subcommunity of developer experts who are advocates for KX’s tools and technology. Members of the program unlock opportunities to contribute their expertise to technical content development, research, and events, and provide product feedback that informs KX’s research and development initiatives. Members receive the following benefits:

“Those of us within the KXperts program are extremely passionate about the work we do every day and are motivated to further our engagement within the broader developer community,” said Alexander Unterrainer, with DefconQ, a kdb/q education blog. “Since becoming a member, I’ve had the opportunity to participate in speaking engagements alongside the KX team, and share my advice, experience and perspectives with a larger audience than I had access to prior.”

“Since I began my kdb journey, I’ve sought opportunities to share the lessons I’ve learned with others in the community. KX has supported this since day one,” said Jemima Morrish, a junior kdb developer at SRC UK. “Upon becoming a member of KXperts a couple months ago, I’ve been able to refine my channel so that I can serve more like a mentor for other young developers just kickstarting their career journeys. Helping them learn and grow has been the most fulfilling benefit.”

“I have become extremely proficient in the q programming language and kdb+ architecture thanks to the resources available from KX,” said Jesús López-González, VP Research at Habla Computing and a member of KXperts. “I’ve also had the opportunity to produce technical content hosted within the KX platform, and lead meetups, better known as ‘Everything Everywhere All With kdb/q,’ where I’ve contributed guidance to KX implementation and how to use the platform to address common challenges.”

The robust, active community is an added benefit for business leaders who prioritize technical solutions that help their development teams onboard new technology smoothly and keep building proficiency. While the community continues to reach new growth milestones, this marks a significant step forward in the company’s efforts to scale and reach new developers, positioning KX as an increasingly accessible and open platform.

Register for the KX Community, apply for the KXperts program or join the Slack Community to start your involvement with the growing developer member base.

To learn more about all resources available to the developer community, please visit the Developer Center: https://kx.com/developer/

The Insights Portfolio brings the small but mighty kdb+ engine to customers who want to perform real-time streaming and historical data analyses. Available as either an SDK (Software Development Kit) or a fully integrated analytics platform, it helps users make intelligent decisions in some of the world’s most demanding data environments.

In our latest release, Insights 1.11, KX introduces a selection of new feature updates designed to improve the user experience, platform security, and overall query efficiency.

Let’s explore.

We will begin with the user experience updates, which include several new features:

Security enhancements include:

Finally, we have made several feature updates to overall query efficiency, including:

To learn more, visit our latest release notes and explore our free trial options.

BOOK A 30 MIN DEMO

Start your journey to becoming an AI-first enterprise with 100x* more performant data and MLOps pipelines.

kdb Insights Enterprise on Microsoft Azure is ideal for scalable quant research in the cloud and supports SQL and Python with unparalleled speed. Data-driven organizations choosing KX for faster decision making:

To see how kdb performed in independent benchmarks that show similar results on replicable data, see: TSBS 2023, STAC-M3, DBOps, and Imperial College London Results for High-performance DB benchmarks.

The kdb Insights portfolio brings the small but mighty kdb+ engine to customers wanting to perform real-time analysis of streaming and historical data. Available as either an SDK (Software Development Kit) or a fully integrated analytics platform, it helps users make intelligent decisions in some of the world’s most demanding data environments.

In our latest update, kdb Insights 1.10, KX have introduced a selection of new features designed to simplify system administration and resource consumption.

Let’s explore.

Working with joins in SQL2: You can now combine multiple tables/dictionaries natively within the kdb Insights query architecture using joins, including INNER, LEFT, RIGHT, FULL, and CROSS.
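As a rough illustration of what these join types return, here is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for the kdb Insights query engine. The tables, columns and values are hypothetical, and SQL2 syntax may differ in its details. RIGHT and FULL joins are left out only because older SQLite builds do not support them.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in engine (an assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE trades (sym TEXT, qty INTEGER);
    CREATE TABLE refdata (sym TEXT, exchange TEXT);
    INSERT INTO trades VALUES ('AAPL', 100), ('MSFT', 50), ('XYZ', 10);
    INSERT INTO refdata VALUES ('AAPL', 'NASDAQ'), ('MSFT', 'NASDAQ');
""")

def run(sql):
    """Execute a query and return all rows as a list of tuples."""
    return conn.execute(sql).fetchall()

# INNER JOIN keeps only symbols present in both tables.
inner_rows = run(
    "SELECT t.sym, t.qty, r.exchange FROM trades t "
    "INNER JOIN refdata r ON t.sym = r.sym ORDER BY t.sym"
)

# LEFT JOIN keeps every trade; missing reference data comes back as NULL (None).
left_rows = run(
    "SELECT t.sym, t.qty, r.exchange FROM trades t "
    "LEFT JOIN refdata r ON t.sym = r.sym ORDER BY t.sym"
)

# CROSS JOIN pairs every trade with every reference row.
cross_rows = run("SELECT t.sym, r.sym FROM trades t CROSS JOIN refdata r")

print(inner_rows)
print(left_rows)
print(len(cross_rows))
```

The unmatched symbol disappears from the inner join but survives the left join with an empty exchange, which is usually the deciding factor when choosing between the two.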

Learn how to work with joins in SQL2

Implementing standardized auditing: To enhance system security and event accountability, standardized auditing has been introduced. This feature ensures every action is tracked and recorded.

Learn how to implement auditing in kdb Insights

Inject environment variables into packages: Administrators can now inject environment variables into both the database and pipelines at runtime. Variables can be set globally or per component and are applicable for custom analytics through global settings.
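On the consuming side, a custom analytic might read injected variables along the following lines. This is only a sketch: the variable names ANALYTICS_LOG_LEVEL and ANALYTICS_BATCH_SIZE are hypothetical, and it does not show how kdb Insights itself performs the injection.

```python
import os

def load_settings(environ=os.environ):
    """Read tunables from the environment, falling back to defaults."""
    return {
        # Hypothetical variable names, chosen for illustration only.
        "log_level": environ.get("ANALYTICS_LOG_LEVEL", "INFO"),
        "batch_size": int(environ.get("ANALYTICS_BATCH_SIZE", "1000")),
    }

# With nothing injected, the defaults apply.
settings_default = load_settings({})

# With values injected at runtime, the same code picks them up unchanged.
settings_injected = load_settings(
    {"ANALYTICS_LOG_LEVEL": "DEBUG", "ANALYTICS_BATCH_SIZE": "250"}
)

print(settings_default)
print(settings_injected)
```

Passing the environment in as a parameter keeps the function easy to test without touching the real process environment.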

Learn more about packages in kdb Insights

kxi-python now supports publish, query and execution of custom APIs: The Python interface, kxi-python, has been extended to allow for publishing and now supports the execution of custom APIs against a deployment. This significantly improves efficiency and streamlines workflows.

Learn how to publish, query and execute custom APIs with kxi-python

Publishing to Reliable Transport (RT) using the CLI: Developers can now use kxi-python to publish ad-hoc messages to the Insights database via Reliable Transport. This ensures reliable streaming of messages and replaces legacy tick architectures used in traditional kdb+ applications.

Learn how to publish to Reliable Transport via the CLI

Offsetting subscriptions in Reliable Transport (RT): We’ve introduced the ability for streams to specify offsets within Reliable Transport. This feature reduces consumption and enhances operational efficiency. Alternative Topologies also reduce ingress bandwidth by up to a third.
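The general idea of subscribing from an offset can be sketched with a toy append-only log. This is a conceptual model only: the MessageLog class and its methods are hypothetical and are not the Reliable Transport API.

```python
class MessageLog:
    """Toy append-only log in which a message's offset is its position."""

    def __init__(self):
        self._messages = []

    def publish(self, message):
        """Append a message and return the offset it was stored at."""
        self._messages.append(message)
        return len(self._messages) - 1

    def read_from(self, offset=0):
        """Return (offset, message) pairs starting at the given offset."""
        if offset < 0:
            raise ValueError("offset must be non-negative")
        return list(enumerate(self._messages[offset:], start=offset))

log = MessageLog()
for i in range(5):
    log.publish(f"m{i}")

# A subscriber starting from the beginning replays everything.
full = log.read_from()

# A subscriber that already processed offsets 0-2 resumes at offset 3
# and receives only the remaining messages.
resumed = log.read_from(3)

print(len(full), resumed)
```

Resuming from an offset means the subscriber receives two messages rather than five, which is the kind of saving the feature description points to.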

Learn how to offset streams with Reliable Transport

Monitoring schema conversion progress: Data engineers and developers now have visibility into the schema conversion process. This feature is especially useful for larger data sets, which typically require a considerable time to convert.

Learn how to monitor schema conversion progress

Utilizing getMeta descriptions: getMeta now includes natural language descriptions of tables and columns, enabling users to attach and retrieve detailed descriptions of database structures.

Learn how to utilize getMeta descriptions

Get started with the fastest and most efficient data analytics engine in the cloud.

In addition to these new features, our engineering teams have been busy working to improve existing components. For example: –

To find out more, visit our latest release notes then get started by exploring our free trial options.

kdb Insights on AWS is ideal for scalable quant research in the cloud and supports SQL and Python with unparalleled speed. Seamless integration with AWS services, like Lambda, S3, and Redshift, empowers you to create a highly performant data analytics solution built for today’s cloud and hybrid ecosystems. Data-driven organizations choosing KX for faster decision making:

A Verified G2 Leader for Time-Series Vector Databases

4.8/5 Star Rating

Data-driven organizations trust KX for faster decision-making

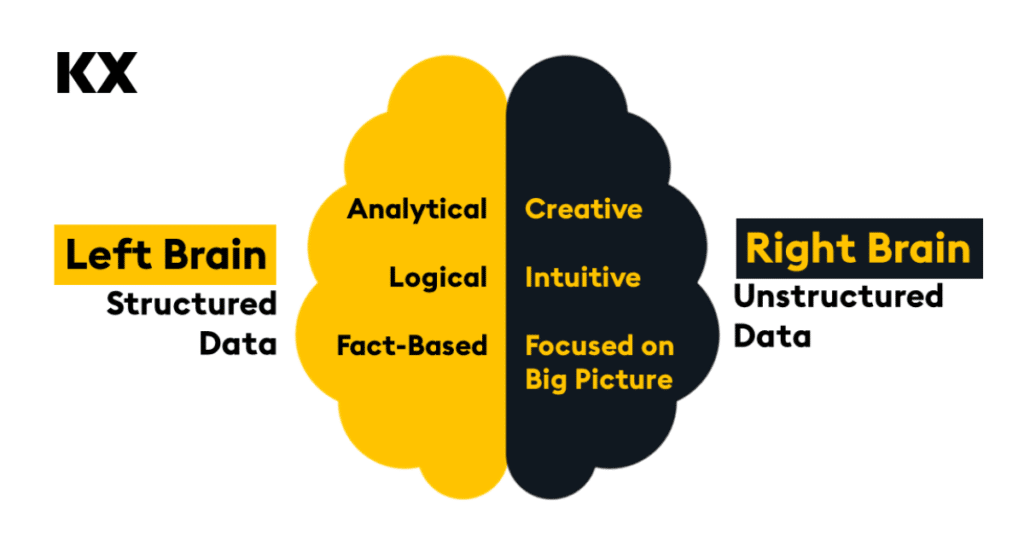

Most technologists view using unstructured data (conversations, text, images, video) and LLMs as a surging wave of technology capabilities. But the truth is, it’s more than that: unstructured data adds an element of surprise and serendipity to using data. It decouples left- and right-brain thinking to improve insight generation and decision-making.

A recent MIT study points to the possibility of elevating analytics in this way. It observed 444 participants performing complex tasks associated with communicating key decisions, such as a data scientist writing an analysis plan for how they intend to explore a dataset. The study found that using GenAI increased speed by 44% and improved quality by 20%. It suggests that analysts, data scientists, and decision-makers of all kinds can elevate decision-making by using GenAI with both unstructured and structured data.

This form of data-fueled decision-making combines the unstructured data that powers right-brain, creative, intuitive, big-picture thinking with the structured data that supports left-brain, analytical, logical, fact-based insight, to inform balanced decisions.

Here’s how it works.

Unstructured data powers creative, intuitive, big-picture thinking. Documents and videos tell stories in a stream of consciousness. In contrast to structured data, unstructured data is designed to unfold ideas in a serendipitous flow: a journey from point A to point B, with arcs, turns, and shifts in context.

Navigating unstructured data is similarly serendipitous. It matches how the brain processes fuzzy logic, relationships among ideas, and pattern-matching. The rise of LLMs and generative AI is largely because prompt-based exploration matches how our brains think about the world – you ask questions via prompts, and neural networks predict what might resolve your quandary. Like your brain, neural networks help analyze the big picture, generate new ideas, and connect previously unconnected concepts.

This creative, right-brain computing style is modeled after how our brains work. In 1943, Warren McCulloch and Walter Pitts published the seminal paper A Logical Calculus of the Ideas Immanent in Nervous Activity, which theorized how computers might mimic our creative brains. In it, they described computing that casts a “net” around data that forms a pattern and sparks creative insight in the human brain. They wrote:

“…Neural events and their relations can be treated using propositional logic. It is found that the behavior of every net can be described in these terms, with the addition of more complicated logical means for nets containing circles, and that for any logical expression satisfying certain conditions, one can find a net behaving in the fashion it describes.”

Eighty years later, neural networks are the foundation of generative AI and machine learning. They create “nets” around data, similar to how humans pose questions. Like the neural pathways in our brain, GenAI uses unstructured data to match patterns.

So, unstructured data provides a new frontier of data exploration, one that complements the creative “nets” that our brains cast naturally over data. But, alone, unstructured data is only fuel for our creativity, and it, too, can benefit from some left-brain capabilities. From a data point of view, the left brain is informed by structured data.

Structured data is digested, curated, and correct. The single source of the truth. Structured data is our human attempt to place the world in order and forms the foundation of analytical, logical, fact-based decision-making.

Above all, it must be high-fidelity, clean, secure, and aligned carefully to corporate data structures. Born from the desire to track revenue, costs, and assets, structured data exists to provide an accurate view of physical objects (products, buildings, geography), transactions (purchases, customer interactions, conversations), companies (employees, reporting hierarchy, distribution networks), and concepts (codes, regulations, and processes). For analytics, structured data is truth serum.

But digested data loses its original fidelity, structure, and serendipity. Yes, structured data shows us that we sold 1,000 units of Widget X last week, but it can’t tell us why customers made those purchasing decisions. It’s not intended to speculate or predict what might happen next. Interpretation is entirely left to the human operator.

By combining access to unstructured and structured data in one place, we gain a new way to engage both the left and right sides of the brain as we explore data.

This demo explains how our vector database, KDB.AI, works with structured and unstructured data to find similar data across time and meaning, and extend the knowledge of Large Language Models.

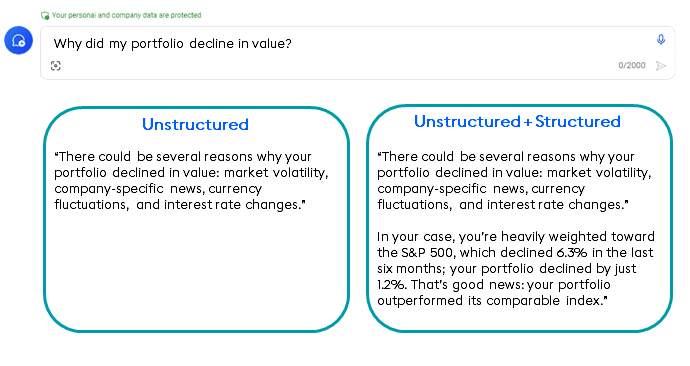

Combining structured and unstructured data marries accuracy with serendipitous discovery for daily judgments. For example, every investor wants to understand why they made or lost money. Generative AI can help answer that question in a generic way (below, left). When we ask why our portfolio declined in value, AI uses unstructured data to provide a remarkably good, human-like response: general market volatility, company-specific news, and currency fluctuations provide an expansive view of what might have made your portfolio decline in value.

But the problem with unstructured-data-only answers is that they’re generic. Trained on a massive corpus of public data, they supply least-common-denominator answers. What we really want to know is why our portfolio declined in value, not an expansive exploration of all the options.

Fusing unstructured data from GenAI with structured data about our portfolios provides the ultimate answer. GenAI, with prompt engineering, interjects the specifics of how your portfolio performed, why your performance varied, and how your choice compared to its comparable index.
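One way to picture this fusion is a prompt that carries structured portfolio facts alongside the free-text question. The sketch below is only an illustration: the function name, field names, and all holdings and benchmark figures are hypothetical, and the resulting text would still need to be sent to whichever LLM is in use.

```python
def build_portfolio_prompt(question, holdings, benchmark_return_pct):
    """Combine a free-text question with structured portfolio rows in one prompt."""
    lines = [
        f"- {h['symbol']}: weight {h['weight_pct']:.1f}%, return {h['return_pct']:+.1f}%"
        for h in holdings
    ]
    # Weighted portfolio return computed from the structured rows.
    portfolio_return = sum(h["weight_pct"] / 100 * h["return_pct"] for h in holdings)
    parts = [
        "Use the portfolio facts below to answer the question.",
        "Holdings:",
        *lines,
        f"Portfolio return: {portfolio_return:+.2f}%",
        f"Benchmark return: {benchmark_return_pct:+.2f}%",
        f"Question: {question}",
    ]
    return "\n".join(parts)

# Made-up holdings for illustration only.
holdings = [
    {"symbol": "AAA", "weight_pct": 60.0, "return_pct": -5.0},
    {"symbol": "BBB", "weight_pct": 40.0, "return_pct": 2.0},
]
prompt = build_portfolio_prompt(
    "Why did my portfolio decline in value?", holdings, benchmark_return_pct=-1.0
)
print(prompt)
```

With the specifics in the prompt, the model can tie its general market knowledge to this particular portfolio rather than listing every possible cause.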

The combination of expansive and specific insight is shown in the right column, below:

Bringing left-and-right brain thinking together in one technology backplane is a new, ideal analytical computing model. Creative, yet logical, questions can be asked and answered.

But all of this is harder than it may sound for five reasons.

Unstructured and structured data live on different technology islands: unstructured data on Document Island and structured data on Table Island. Until now, different algorithms, databases, and programming interfaces have been used to process each. Hybrid search builds a bridge between Document and Table Island to make left-and-right brain queries possible.
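A minimal sketch of that bridge, under simplifying assumptions, is to filter on a structured field first and then rank the surviving documents by vector similarity. The documents, two-dimensional embeddings and field names here are toy values, and this is not the KDB.AI API.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def hybrid_search(docs, query_vec, sector, top_k=2):
    """Filter on a structured field, then rank the survivors by similarity."""
    candidates = [d for d in docs if d["sector"] == sector]
    ranked = sorted(
        candidates,
        key=lambda d: cosine(d["embedding"], query_vec),
        reverse=True,
    )
    return [d["id"] for d in ranked[:top_k]]

# Toy documents: a structured attribute plus a tiny made-up embedding.
docs = [
    {"id": "note-1", "sector": "tech", "embedding": [0.9, 0.1]},
    {"id": "note-2", "sector": "tech", "embedding": [0.1, 0.9]},
    {"id": "note-3", "sector": "energy", "embedding": [1.0, 0.0]},
]

results = hybrid_search(docs, query_vec=[1.0, 0.0], sector="tech")
print(results)
```

Although note-3 is the closest vector match, the structured filter excludes it, which shows how the "Table Island" constraint shapes what the "Document Island" search can return.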

Hybrid search requires five technical elements:

In our next post, we’ll explore these elements and how they build a bridge between creative and logical data-driven insights. Together, they form a new way of constructing an enterprise data backplane with an AI Factory approach to combine both data types in one hybrid context.

The business possibilities of combining left-and-right brain analytics are as fundamental as the shift in how decision-making works in the context of AI. So, consider introducing new ways of thinking, built on new hybrid data technology capabilities, for elevated data exploration and decision-making.

Learn how to integrate unstructured and structured data to build scalable Generative AI applications with contextual search at our KDB.AI Learning Hub.

by Steve Wilcockson

Two Stars Collide: Thursday at KX CON [23]

My favorite line, which drew audible gasps on the opening day of a packed KX CON [23]:

“I don’t work in q, but beautiful beautiful Python,” said Erin Stanton of Virtu Financial simply and eloquently. As the q devotees in the audience chuckled, she qualified her statement further: “I’m a data scientist. I love Python.”

The q devotees had their moments later, however, when Pierre Kovalev, a developer on the KX Core Team, didn’t show PowerPoint but 14 rounds of q, interactively swapping characters in his code on the fly to demonstrate key language concepts. The audience lapped up the q show; it was brilliant.

Before I return to how Python and kdb/q stars collide, I’ll note the many announcements during the day, which are covered elsewhere and to which I may return in a later blog. They include:

Also, Kevin Webster of Columbia University and Imperial College highlighted the critical role of kdb in price impact work. He referenced many of my favorite price impact academics, many hailing from the great Capital Fund Management (CFM).

Yet the compelling theme throughout Thursday at KX CON [23] was the remarkable blend of the dedicated, hyper-efficient kdb/q and data science creativity offered up by Python.

Erin’s Story

For me, Erin Stanton’s story was absolutely compelling. A few years back, her team at broker Virtu Financial had converted what seemed to be largely static, formulaic SQL applications into meaningful research applications. The new generation of apps was built with Python, with kdb behind the scenes serving up clean, consistent data efficiently and quickly.

“For me as a data scientist, a Python app was like Xmas morning. But the secret sauce was kdb underneath. I want clean data for my Python, and I did not have that problem any more. One example, I had a SQL report that took 8 hours. It takes 5 minutes in Python and kdb.”

The Virtu story shows Python/kdb interoperability. Python allows them to express analytics, most notably machine learning models (random forests had more mentions in 30 minutes than I’ve heard in a year working at KX, which was an utter delight! I’ve missed them). Her team could apply their models to data sets amounting to 75k orders a day, in one case 6 million orders over a four-month period, an unusual time horizon but one which covered differing market volatilities for training and key feature extraction. They could specify different, shorter time horizons and apply different decision metrics. “I never have problems pulling the data.” The result: feature engineering for machine learning models that drives better prediction and greater client value. With this, Virtu Financial have been able to “provide machine learning as a service to the buyside… We give them a feature engineering model set relevant to their situation!”, driven by Python, with data served up by kdb.

The Highest Frequency Hedge Fund Story

I won’t name the second speaker, but let’s just say they’re leaders on the high-tech algorithmic buy-side. They want Python to exhibit q-level performance. That way, their technical teams can use Python-grade utilities that can deliver real-time event processing and a wealth of analytics. For them, 80 to 100 nodes could process a breathtaking trillion+ events per day, serviced by a sizeable set of Python-led computational engines.
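To put those figures in perspective, here is a back-of-envelope calculation. It assumes exactly one trillion events spread evenly over 24 hours and evenly across nodes, which real market traffic certainly is not, so treat the results as rough averages rather than peak rates.

```python
# "trillion+" is taken as exactly one trillion for this estimate (an assumption).
EVENTS_PER_DAY = 1_000_000_000_000
SECONDS_PER_DAY = 24 * 60 * 60

# Average cluster-wide rate if events arrived uniformly through the day.
events_per_second = EVENTS_PER_DAY / SECONDS_PER_DAY

# Average per-node rate for the two ends of the quoted node range.
per_node = {nodes: events_per_second / nodes for nodes in (80, 100)}

print(f"{events_per_second:,.0f} events/s across the cluster")
for nodes, rate in per_node.items():
    print(f"{nodes} nodes: {rate:,.0f} events/s per node")
```

That works out to roughly 11.6 million events per second overall, or somewhere between about 116,000 and 145,000 per node on average.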

Overcoming the perceived hurdle of expressive yet challenging q at the hedge fund, PyKX bridges Python to the power of kdb/q. Their traders, quant researchers and software engineers could embed kdb+ capabilities to deliver very acceptable performance for the majority of their (interconnected, graph-node implemented) Python-led use cases. With no need for C++ plug-ins, Python controls the program flow. Behind the scenes, the conversion between NumPy, pandas, Arrow and kdb objects is abstracted away.

This is a really powerful use case from a leader in its field, showing how kdb can be embedded directly into Python applications for real-time, ultra-fast analytics and processing.

Alex’s Story

Alex Donohoe of TD Securities took another angle for his exploration of Python & kdb. For one thing, he worked with over-the-counter products (FX and fixed income primarily) which meant “very dirty data compared to equities.” However, the primary impact was to explore how Python and kdb could drive successful collaboration across his teams, from data scientists and engineers to domain experts, sales teams and IT teams.

Alex’s personal story was fascinating. As a physics graduate, he’d reluctantly picked up kdb in a former life, asking “can’t I just take this data and stick it somewhere else, e.g., MATLAB?”

He stuck with kdb.

“I grew to love it, the cleanliness of the [q] language,” he said, calling it “very elegant for joins.” On joining TD, he was forced to go without and worked with pandas, but he built his ecosystem in such a way that he could integrate with kdb at a later date, which he and his team indeed did. His journey had therefore gone from “not really liking kdb very much at all to really enjoying it, to missing it”, appreciating its ability to handle difficult maths efficiently; for example, “you do need a lot of compute to look at flow toxicity.” He learnt that Python could offer interesting signals out of the box, including non-high-frequency signals, and was great for plumbing, yet kdb remained unsurpassed for its number crunching.

Having finally introduced kdb to TD, he’s careful to promote it well and wisely. “I want more kdb so I choose to reduce the barriers to entry.” His teams mostly start with Python, but they move into kdb as the problems hit the kdb sweet spot.

On his kdb and Python journey, he noted some interesting, perhaps surprising, findings. “Python data explorers are not good. I can’t see timestamps. I have to copy & paste to Excel, painfully. Frictions add up quickly.” He felt “kdb data inspection was much better.” From a Java perspective too, he looks forward to mimicking the developmental capabilities of Java when able to use kdb in VS Code.

Overall, he loved that data engineers, quants and electronic traders could leverage Python, but draw on his kdb developers to further support them. Downstream risk, compliance and sales teams could also derive meaningful insights more easily and quickly, which was particularly important as they became more data-aware and wanted to serve themselves.

Thursday at KX CON [23]

The first day of KX CON [23] was brilliant: a great swathe of announcements and superb presentations. For me, the highlight was the different stories of how, when Python and kdb stars align, magic happens, while the q devotees saw some brilliant q code.

AMERICAS

Tel: +1 (212) 447 6700

EMEA

Tel: +44 (0)28 3025 2242

APAC

Tel: +65 65921960

ANZ

Tel: +61 (0)2 9236 5700

©2025 KX. All Rights Reserved. KX® and kdb+ are registered trademarks of KX Systems, Inc., a subsidiary of FD Technologies plc.