Many AI practitioners unknowingly make a critical mistake: they pick the “best” embedding model and assume it guarantees top performance. The truth? The best model on paper (or on MTEB, the Massive Text Embedding Benchmark) might be the worst choice for your specific application.

Here’s why:

Benchmark scores don’t tell the whole story: Leaderboards are great for academic comparisons but miss the nuances of your data and use case. A top model on generic datasets might struggle with your domain-specific challenges.

Bigger isn’t always better: Massive models with billions of parameters are tempting but come with higher computational costs, slower inference, and more deployment complexity. Do you really need that 7-billion-parameter model when a smaller, efficient one like jina-embeddings-v3 could work just as well, or even better? Smaller models are easier to deploy, scale, and integrate.

Overlooking domain specificity: Generic models are trained on broad data and might miss critical nuances in specialized fields like legal, medical, or financial services. Domain-specific or fine-tuned embeddings can significantly outperform general-purpose ones. A quickly fine-tuned tiny embedding model might massively outperform a bulky 7k-dimension model!

Ignoring practical constraints: Resource availability, latency requirements, and scalability are often overshadowed by leaderboard rankings. What’s the use of a state-of-the-art model if it doesn’t fit your deployment constraints or budget?

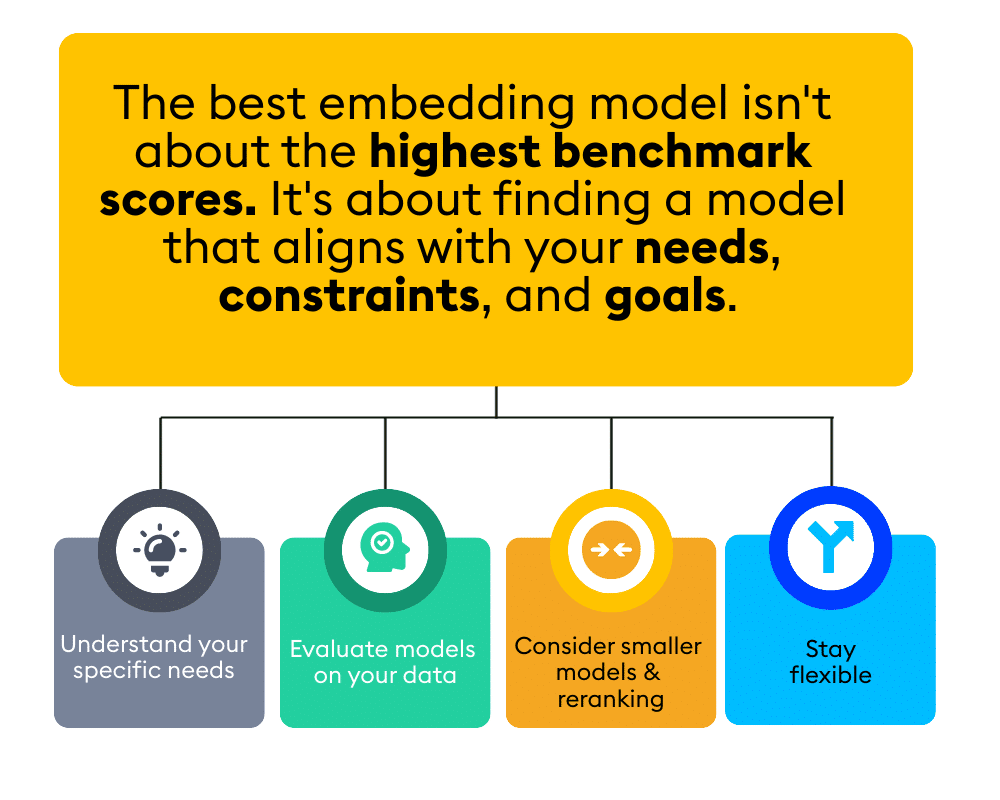

What should you do instead?

Understand your specific needs: Define what you need from an embedding model: semantic search, classification, or recommendation? Know the nature of your data.

Evaluate models on your data: Don’t rely solely on benchmarks. Test multiple models on your data to see which performs best. Actually look at the results! Search is challenging. If results are poor, consider fine-tuning, hybrid search, or a better reranker.
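To make "test multiple models on your data" concrete, here is a minimal sketch of a recall@k comparison. It assumes you have already embedded a small set of queries and documents with each candidate and labeled one relevant document per query. The names `model_a` and `model_b` and the toy vectors are placeholders, not real models or real results.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def recall_at_k(query_vecs, doc_vecs, relevant, k):
    """Fraction of queries whose labeled relevant doc appears in the top k.

    relevant[i] is the index (into doc_vecs) of the doc relevant to query i.
    """
    hits = 0
    for q, rel in zip(query_vecs, relevant):
        ranked = sorted(
            range(len(doc_vecs)),
            key=lambda d: cosine(q, doc_vecs[d]),
            reverse=True,
        )
        if rel in ranked[:k]:
            hits += 1
    return hits / len(query_vecs)

# Toy embeddings standing in for the output of two hypothetical models.
candidates = {
    "model_a": {
        "queries": [[1.0, 0.0], [0.0, 1.0]],
        "docs": [[0.9, 0.1], [0.1, 0.9], [0.7, 0.7]],
    },
    "model_b": {
        "queries": [[1.0, 0.0], [0.0, 1.0]],
        "docs": [[0.1, 0.9], [0.9, 0.1], [0.7, 0.7]],
    },
}
relevant = [0, 1]  # query 0 -> doc 0, query 1 -> doc 1

scores = {
    name: recall_at_k(emb["queries"], emb["docs"], relevant, k=1)
    for name, emb in candidates.items()
}
for name, score in scores.items():
    print(f"{name}: recall@1 = {score:.2f}")
```

A single number like this is only a starting point. Pair it with a manual read of the top results for a handful of real queries, since a metric can hide the kinds of failures that matter most to your users.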

Consider smaller models and reranking: Smaller, efficient models combined with a reranker can deliver comparable quality while reducing costs and improving scalability. Remember: generating embeddings adds latency, and so does retrieval! Without a quantized index such as IVFPQ, high-dimensional embeddings can slow down search.
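A quick back-of-the-envelope calculation shows why dimensionality and quantization matter. The sketch below assumes vectors are stored as 32-bit floats and that product quantization stores one 8-bit code per subquantizer. It ignores codebooks and inverted-list overhead, so treat the numbers as rough lower bounds. The corpus size and dimensions are illustrative, not measurements.

```python
def raw_index_bytes(num_vectors, dim, bytes_per_value=4):
    """Size of uncompressed vectors (float32 by default)."""
    return num_vectors * dim * bytes_per_value

def pq_code_bytes(num_vectors, num_subquantizers, bits_per_code=8):
    """Size of product-quantized codes, excluding codebooks and list overhead."""
    return num_vectors * num_subquantizers * bits_per_code // 8

num_vectors = 1_000_000  # illustrative corpus size

for dim in (384, 1024, 4096):
    gb = raw_index_bytes(num_vectors, dim) / 1e9
    print(f"{dim:>5} dims, float32: {gb:.3f} GB")

# Compressing each vector to 64 one-byte codes (an assumed PQ setting).
mb = pq_code_bytes(num_vectors, num_subquantizers=64) / 1e6
print(f"PQ with 64 subquantizers: {mb:.0f} MB")
```

The trade-off is that quantization is lossy, so recall should be re-measured on your own data after compressing the index.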

Stay flexible: The field evolves rapidly. Adapt and re-evaluate your choices as new models and techniques emerge. Recently, late-interaction models like ColBERT have emerged as a powerful option for reranking.
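For intuition, late interaction scores a document by matching every query token embedding against its best-matching document token embedding and summing those maxima (often called MaxSim). The sketch below is a simplified illustration of that scoring rule, not ColBERT's actual implementation. It assumes token embeddings are already computed and normalized, and the document IDs and vectors are made up.

```python
def dot(u, v):
    """Dot product; equals cosine similarity when vectors are unit length."""
    return sum(a * b for a, b in zip(u, v))

def maxsim_score(query_tokens, doc_tokens):
    """Sum, over query tokens, of the best similarity to any doc token."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)

def rerank(query_tokens, candidates):
    """Order candidate doc IDs by descending MaxSim score.

    candidates maps a doc ID to its list of token embeddings.
    """
    return sorted(
        candidates,
        key=lambda doc_id: maxsim_score(query_tokens, candidates[doc_id]),
        reverse=True,
    )

# Toy token embeddings (hypothetical): two query tokens, three candidate docs.
query = [[1.0, 0.0], [0.0, 1.0]]
first_stage_hits = {
    "doc_c": [[0.6, 0.2]],              # weak match for both query tokens
    "doc_b": [[1.0, 0.0], [1.0, 0.0]],  # matches only the first query token
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],  # matches both query tokens
}

ranking = rerank(query, first_stage_hits)
print(ranking)
```

In a two-stage setup, a small embedding model would produce `first_stage_hits` cheaply, and the more expensive token-level scoring would run only on that short list.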

The bottom line

Choosing the best embedding model isn’t about the highest benchmark scores. It’s about finding a model that aligns with your needs, constraints, and goals. A nuanced approach helps build AI systems that are powerful, efficient, and scalable. I dove deep into this topic, sharing successes and hard-learned lessons in my latest ebook, “The ultimate guide to choosing embedding models for AI applications”. If you’re looking to optimize your AI applications, it’s a resource you won’t want to miss.