Key takeaways

- GPUs unlock real-time AI for capital markets.

- Hybrid CPU–GPU stacks balance performance and scale.

- LLMs and deep learning run best on GPU infrastructure.

- KX + NVIDIA simplify GPU adoption and reduce risk.

- Start small, scale fast. Pilot use cases prove value.

As AI workloads grow in size and complexity, GPUs offer capital markets firms the compute power needed to go beyond what CPUs alone can deliver.

Capital markets firms are at a pivotal crossroads in infrastructure strategy. CPUs have long underpinned traditional real-time data processing and trading strategies. But the demands of AI, deep learning, and unstructured data analytics are driving growing interest in the selective adoption of GPU-based systems.

This doesn’t mean discarding CPUs. It’s about understanding where GPUs can bring transformative value, and how to integrate them with minimal disruption. In this post, I’ll explore the why, where, and how of GPU adoption in capital markets, and how a new approach from KX and NVIDIA can help you accelerate this transition.

What CPUs still do best in capital markets (and where GPUs now add value)

Financial tech stacks have long relied on CPUs for their cost-effectiveness, power efficiency, and reliability. They’ve proven their worth in use cases such as low-latency real-time data processing and traditional algorithmic workloads, including statistical models, classical machine learning, and high-speed financial computation.

These foundational workloads remain the ‘bread and butter’ of financial operations and are not going away. CPUs alone will continue to anchor such core capital markets workloads. However, with the rise of GenAI, new demands have emerged that benefit significantly from the parallel processing power of GPUs, including:

- Deep learning workflows: Model training, fine-tuning, and, most critically, inference are all compute-intensive, and GPUs excel at accelerating them

- Unstructured data processing: Embedding news, filings, and alternative data into actionable insights at scale requires GPU-accelerated vectorization

- Large language models: Hosting LLMs for research automation, sentiment analysis, and conversational interfaces that deliver low-latency results requires GPU infrastructure

Modern GPU infrastructure also enables more advanced capabilities that directly address the performance and precision demands of capital markets:

- Multimodal context synthesis: AI agents can now extract and unify insights from time series data, documents, and visual elements (charts, tables, images) in one step, enabling LLMs to reason across data types and eliminate contradictions at source

- Precision retrieval at scale: With cuVS and CUDA-accelerated pipelines, vector search and time-series analytics can keep pace with petabyte-scale data demands, ensuring decision-ready signals are surfaced in real time without fragment loss or post-hoc stitching
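To make the vector search idea concrete, here is a minimal sketch of similarity retrieval over embeddings. It is a brute-force, CPU-only illustration in plain Python, not the cuVS or KDB.AI API; the document names, the three-dimensional vectors, and the helper functions `cosine_similarity` and `top_k` are all hypothetical. GPU-accelerated libraries speed up this same kind of nearest-neighbor comparison across far larger collections.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, corpus, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
corpus = {
    "earnings_call": [0.9, 0.1, 0.0],
    "rate_decision": [0.1, 0.9, 0.2],
    "merger_filing": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

results = top_k(query, corpus, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Every query here is compared against every stored vector, which is exactly the kind of embarrassingly parallel arithmetic that maps well to GPUs once the corpus grows to millions of vectors.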

Where GPUs fit in your tech stack and where they don’t

Not every part of your stack needs GPUs. But for specific tasks that let you fully capitalize on the AI era, they are indispensable. The key is to plug them into your architecture where they are most necessary and effective. This is about smart integration, not wholesale replacement.

Often, the value driver will be using AI to augment, enhance, and automate the traditional processes you already run. For example, backtesting a deep-learning trading strategy might combine CPU-hosted market data replay with GPU-accelerated prediction models, enabling rapid iteration on complex scenarios. Or you might plug traditional algorithms into agentic workflows to unlock greater flexibility, intelligence, and insights.
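The backtesting split described above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not a KX or NVIDIA implementation: `make_samples` and `replay` stand in for the CPU-hosted market data replay, and `predict_batch` is a hypothetical placeholder (a naive momentum rule running on the CPU) for where a GPU-hosted deep learning model would score a whole batch at once. The prices are made-up sample data.

```python
def make_samples(prices, window):
    """Pair each look-back window with the price move that follows it."""
    samples = []
    for i in range(window - 1, len(prices) - 1):
        lookback = prices[i - window + 1 : i + 1]
        next_move = prices[i + 1] - prices[i]
        samples.append((lookback, next_move))
    return samples


def replay(samples, batch_size):
    """CPU side: replay historical samples in fixed-size batches."""
    for start in range(0, len(samples), batch_size):
        yield samples[start : start + batch_size]


def predict_batch(windows):
    """Placeholder for a GPU-hosted model: +1 (long) if the window rose, else -1 (short)."""
    return [1 if w[-1] > w[0] else -1 for w in windows]


def backtest(prices, window=3, batch_size=4):
    """Sum the profit and loss of trading one unit in each predicted direction."""
    pnl = 0.0
    for batch in replay(make_samples(prices, window), batch_size):
        signals = predict_batch([lookback for lookback, _ in batch])
        pnl += sum(signal * move for signal, (_, move) in zip(signals, batch))
    return pnl


# Made-up price path for illustration only.
prices = [100.0, 101.0, 102.0, 103.0, 102.0, 101.0, 100.0, 101.0]
total_pnl = backtest(prices)
print(total_pnl)
```

Batching matters in this design because a GPU is used most efficiently when it scores many windows per call, while the replay loop, ordering, and bookkeeping stay on the CPU.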

By combining the strengths of CPUs and GPUs, you can layer innovation on top of what already works. Through deliberate, incremental AI adoption, you can future-proof your stack and minimize disruption of legacy systems.

How to combine CPUs and GPUs in a hybrid architecture for AI innovation

The most successful capital markets strategies will emerge from hybrid architectures, utilizing GPUs where AI acceleration is essential and CPUs where traditional performance remains paramount. This approach enables:

- AI-enhanced research and risk workflows

- Backtesting with mixed workloads

- Real-time insights from combined structured and unstructured data

This hybrid model aligns with how firms are looking to integrate GenAI and deep learning without rebuilding entire infrastructures. The result: faster time to insight, stronger performance, and greater flexibility.

Solving GPU adoption challenges

While the benefits are clear, implementation isn’t straightforward. It’s hard to ‘just add GPUs’. Retooling existing infrastructure introduces real challenges:

- Infrastructure complexity: GPU integration often requires changes to data pipelines, storage, and memory bandwidth

- Workflow redesign: Models often need to be rearchitected to leverage GPUs effectively – this is not a simple ‘lift and shift’

- ROI uncertainty: GPUs are expensive. Without clear use cases, costs can mount without immediate returns

- Safe deployment at scale: Generating insights is only half the battle. Getting them into live trading, risk, or research workflows, at speed, with governance and consistency, is where most firms struggle

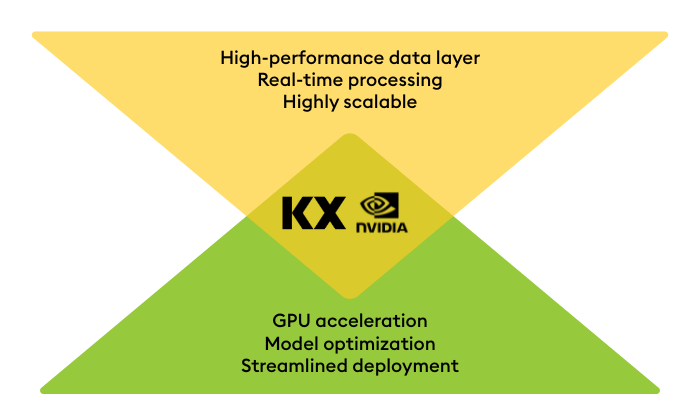

We understand these pain points and are acutely aware of the bottlenecks in GenAI adoption. That’s why we partnered with NVIDIA to combine our high-performance analytical database with NVIDIA’s powerful GPU acceleration and AI ecosystem. This unified, AI factory approach tackles the infrastructure-heavy lifting associated with AI adoption, reducing reliance on a patchwork of fragmented solutions and accelerating time to value.

With the KX and NVIDIA AI Factory, you get immediate access to:

- GPU-powered infrastructure: No need to build your own from scratch. Skip the integration pain so you can focus on what you do best

- Blueprints for proven use cases: Hit the ground running with repeatable examples, such as equity or quant research assistants trained on millions of vectors of unstructured enterprise-level data

- KX-native speed and integration: Turbocharge workloads by combining the power of NVIDIA GPUs with the proven performance and time-aware capabilities of KDB.AI

The KX and NVIDIA AI factory

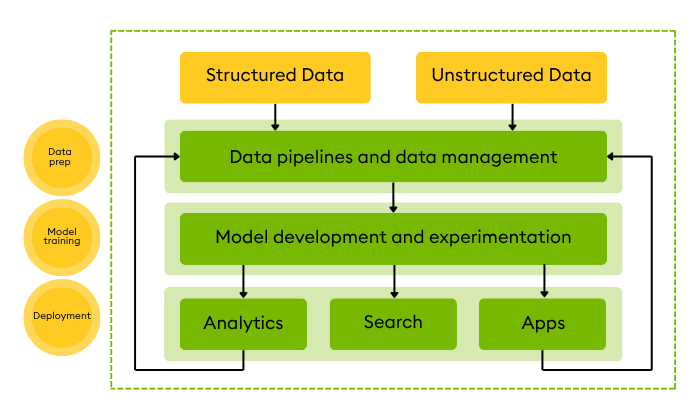

Building faster with KX and NVIDIA

Using the AI factory approach significantly reduces disruption, cost, and time-to-value when integrating GenAI into your workflows. You’ll be able to:

- Search unstructured and structured data to find the most relevant insights

- Rapidly calculate new information – volatility, moving averages, statistical models – with kdb and q core technology

- Apply deep learning to this data to predict new outcomes

All of this comes in one unified, enterprise-ready platform designed to deliver results fast.
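For readers less familiar with the calculations mentioned in the list above, here is a small sketch of a simple moving average and a realized volatility estimate. It is written in plain Python purely for illustration; in practice these would run in kdb and q, and nothing here represents the KX API. The function names and the sample prices are hypothetical.

```python
import math
import statistics


def moving_average(values, window):
    """Simple moving average over each full window of the series."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def log_returns(prices):
    """Log return between each pair of consecutive prices."""
    return [math.log(later / earlier) for earlier, later in zip(prices, prices[1:])]


def realized_volatility(prices):
    """Sample standard deviation of log returns (not annualized)."""
    return statistics.stdev(log_returns(prices))


# Made-up closing prices for illustration only.
prices = [100.0, 102.0, 101.0, 103.0, 104.0]

sma = moving_average(prices, window=3)
vol = realized_volatility(prices)
print(sma)
print(f"{vol:.4f}")
```

Derived series like these are typical inputs to a downstream deep learning model, which is where the GPU side of the platform would take over.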

How to start your GPU adoption journey with confidence

Your GPU strategy should reflect where you are in your AI journey. If you’re experimenting, stick with CPU-optimized environments. But if you’re ready to scale use cases like research automation, surveillance, or deep learning-based signal generation, especially when unstructured data is involved, GPUs are essential.

Start with a focused pilot that can prove value fast and scale into production under real-world latency, governance, and ROI constraints. That’s where KX and NVIDIA come in, helping you move from exploratory to executable without the usual infrastructure headaches.

Building an AI-ready infrastructure doesn’t have to be disruptive. Explore how we are working with NVIDIA to help firms pilot and scale GPU-powered workloads and move beyond AI experimentation with infrastructure built for speed, scale, and real-time performance.