Key Takeaways

- TSFMs are sophisticated AI models pre-trained on massive amounts of diverse, real-world time series data.

- TSFMs leverage pre-existing knowledge to generate accurate forecasts on new data with minimal or no additional training.

- TSFMs can surface deeper insights by capturing hidden trends, subtle seasonality, and complex interactions in temporal data.

- TSFMs provide rapid forecasting and scenario analysis for finance, aerospace & defense, retail, energy, IT operations, and manufacturing.

- Most TSFMs leverage a transformer architecture whose self-attention mechanism weighs the importance of different data points regardless of their position in the sequence.

Time series foundation models (TSFMs) are rapidly changing how we handle and predict time-stamped data, enabling more accurate forecasts and better data-driven decisions across industries. Whether predicting aircraft component failure rates for preventive maintenance, forecasting market volatility during economic uncertainty, or optimizing power grid load balancing during peak demand periods, TSFMs provide the power, scalability, and efficiency needed to extract more insight from temporal data.

What is a time series foundation model (TSFM)?

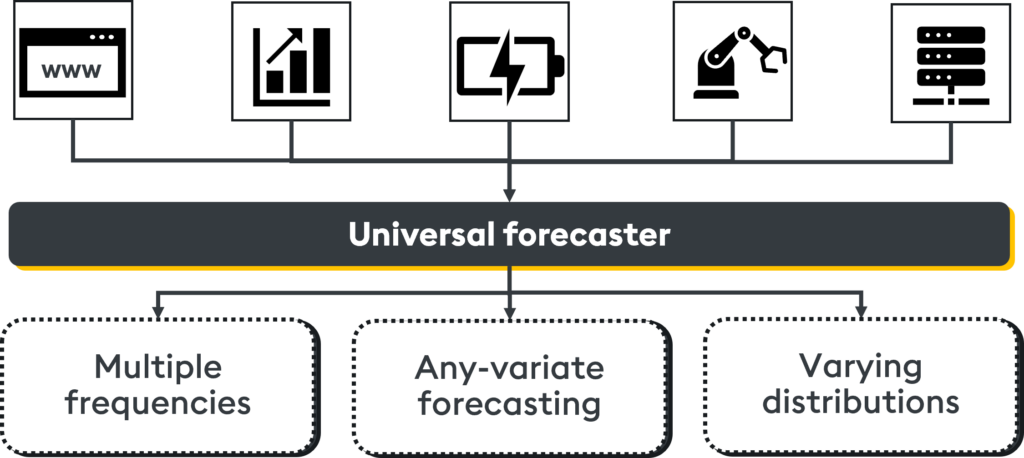

A time series foundation model (TSFM) is a sophisticated AI model pre-trained on massive amounts of diverse, real-world time series data. By processing billions of data points, it learns the generalized patterns, seasonality, trends, and complex temporal dynamics shared across varied datasets, building a broad, foundational knowledge base.

TSFMs fundamentally change the traditional forecasting approach, which has historically meant building a small, isolated model for every new dataset or specific task. Instead, users can leverage a TSFM’s pre-existing knowledge to generate accurate forecasts on new data with minimal or no additional training.

Benefits and differentiators:

- Rapid forecasting & scenario analysis: Enable quick generation of forecasts to run “what-if” scenarios efficiently

- Scalability: Manage hundreds or thousands of time series datasets concurrently

- Deeper insights: Capture hidden trends, subtle seasonality, and complex interactions, often missed by older methods

- Reduced training: Minimal additional training (fine-tuning) compared with developing bespoke models

- Efficiency & speed: Substantial time savings in model development, deployment, and maintenance. Accelerate time-to-insight with less data and expertise

What are transformers?

Most leading TSFMs leverage a transformer architecture, a neural network design originally developed for language tasks such as machine translation. Older methods such as recurrent neural networks process sequences step by step. Transformers instead use self-attention, which lets the model process an entire sequence simultaneously and weigh the importance of different data points relative to each other, regardless of their position in the sequence.

When applied to time series, self-attention allows a TSFM to look at the entire historical sequence, whether it spans days, months, or years, and determine which points matter most for predicting the future.

This enables the model to capture:

- Recurring patterns: Identify cycles such as daily peaks or weekly variation

- Complex seasonality: Recognize longer-period seasonal effects such as yearly cycles or holiday impacts

- Sudden shifts: Detect anomalies, structural breaks, or significant changes in data behavior

- Long-range dependencies: Understand how data points and events far in the past influence future outcomes

By viewing the sequence as a whole, transformers can often capture complex temporal dynamics more accurately than methods that step through it sequentially. This ability to generalize across diverse patterns makes them a natural fit for building foundation models that work across many forecasting tasks.
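The following minimal, single-head sketch in plain Python makes self-attention concrete. It assumes a toy embedding of each time step: the scaled value plus a sine/cosine phase for a hypothetical 4-step cycle. The projection matrices are random and untrained. The sketch shows only the mechanics. Every position scores every other position in one pass, and the softmax weights decide how much each point contributes. Real TSFMs learn these projections during pre-training, and many attend over patches rather than single points. Decoder-only models such as TimesFM also apply a causal mask, which this sketch omits.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no causal mask).

    Returns (outputs, weights); weights[i][j] is how strongly position i
    attends to position j, however far apart the two positions are.
    """
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key in the sequence at once.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        attn = softmax(scores)
        weights.append(attn)
        # Each output is the attention-weighted average of all value vectors.
        outputs.append([sum(a * v[j] for a, v in zip(attn, values))
                        for j in range(len(values[0]))])
    return outputs, weights


rng = random.Random(0)
d_model = 4  # size of the query/key/value projections (assumption)


def random_matrix(rows, cols):
    """Untrained stand-in for learned projection weights."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


series = [10, 12, 15, 11, 10, 12, 16, 11]
# Hypothetical toy embedding: scaled value plus the phase of a 4-step cycle.
tokens = [[x / 10, math.sin(2 * math.pi * t / 4), math.cos(2 * math.pi * t / 4)]
          for t, x in enumerate(series)]
w_q, w_k, w_v = (random_matrix(d_model, 3) for _ in range(3))
outputs, weights = self_attention(tokens, w_q, w_k, w_v)
```

The weights are random, so the resulting attention pattern carries no meaning here. What matters is that each row `weights[i]` spans the whole sequence. That is what lets a trained model relate a point directly to one many steps earlier.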

Alternative architectures

Transformers form the backbone of many prominent TSFMs, such as Google’s TimesFM and Amazon’s Chronos, but they are not the only approach. IBM’s tiny time mixers (TTM) use an MLP-based (multi-layer perceptron) architecture. It replaces the attention mechanism with fully connected layers within a patch-mixer structure, which yields a remarkably lightweight model (<1M parameters) with strong performance in zero-shot scenarios.
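The sketch below illustrates the general patch-mixer idea in plain Python. It splits the series into patches. It then applies small fully connected blocks first across patches, mixing information over time, and then within each patch, with residual connections. This is not IBM's TTM implementation. The patch length, hidden size, random weights, and helper names are all assumptions chosen for illustration.

```python
import random


def make_patches(series, patch_len):
    """Split a series into non-overlapping patches, dropping any leftover oldest points."""
    start = len(series) % patch_len
    return [series[i:i + patch_len] for i in range(start, len(series), patch_len)]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def dense(vector, weights, bias):
    """Fully connected layer: weights has one row per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]


def mlp(vector, w1, b1, w2, b2):
    """Two dense layers with a ReLU between them; output size matches input size."""
    hidden = [max(0.0, h) for h in dense(vector, w1, b1)]
    return dense(hidden, w2, b2)


def init_mlp(rng, size, hidden):
    """Random (untrained) weights for an MLP mapping size -> hidden -> size."""
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(size)] for _ in range(hidden)]
    w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(size)]
    return w1, [0.0] * hidden, w2, [0.0] * size


def count_params(params):
    w1, b1, w2, b2 = params
    return len(w1) * len(w1[0]) + len(b1) + len(w2) * len(w2[0]) + len(b2)


def mixer_block(patches, time_params, patch_params):
    """One mixer block: no attention, only fully connected layers plus residuals."""
    # 1) Time mixing: for each position inside a patch, mix values across all patches.
    columns = transpose(patches)  # shape: patch_len x num_patches
    columns = [[c + m for c, m in zip(col, mlp(col, *time_params))] for col in columns]
    rows = transpose(columns)     # back to num_patches x patch_len
    # 2) Within-patch mixing: mix the points inside each patch.
    return [[r + m for r, m in zip(row, mlp(row, *patch_params))] for row in rows]


rng = random.Random(42)
series = [float(t % 6) for t in range(24)]
patches = make_patches(series, patch_len=4)           # 6 patches of 4 points
time_params = init_mlp(rng, size=len(patches), hidden=8)
patch_params = init_mlp(rng, size=4, hidden=8)
mixed = mixer_block(patches, time_params, patch_params)
total_params = count_params(time_params) + count_params(patch_params)
```

This toy block has 186 parameters, all of them in small dense layers. Their size depends only on the number of patches, the patch length, and the hidden width.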

Training data requirements

A critical requirement for building effective TSFMs is massive, diverse, time-stamped data for pre-training. Language and vision models can draw on vast amounts of publicly available text and images. High-quality time series data across domains (finance, IoT, retail, etc.), by contrast, is often proprietary. Fortunately, public collections such as the Monash Time Series Forecasting Repository have been compiled to help address this gap.

Leading models have been trained at enormous scale. Google’s TimesFM, for example, was pre-trained on a corpus of 100 billion real-world time points from sources such as Google Trends and Wikipedia page views. Datadog’s Toto was trained on 1 trillion observability data points.

Zero-shot vs few-shot forecasting

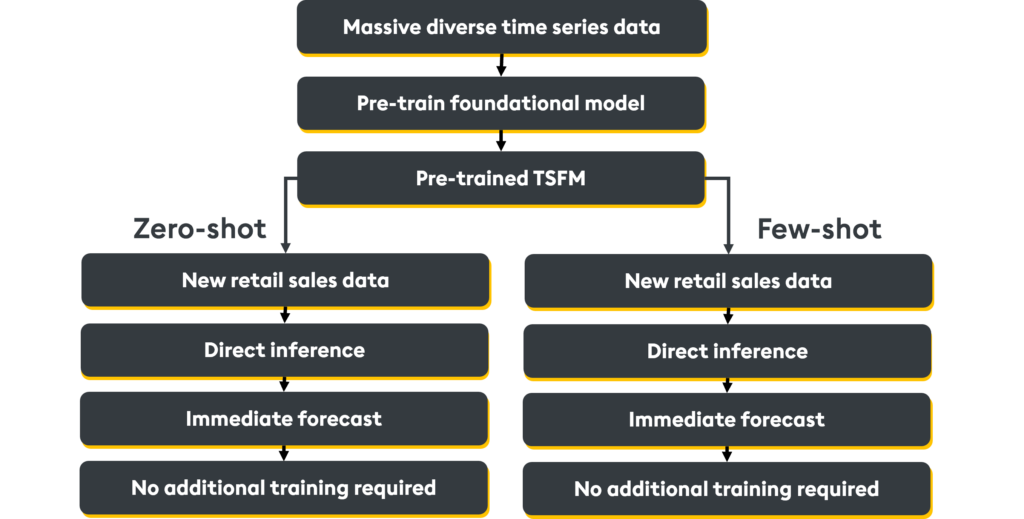

Once pre-trained, a TSFM can be applied through either zero-shot or few-shot forecasting:

Zero-shot forecasting (instant prediction)

Zero-shot forecasting involves applying the pre-trained TSFM directly to a brand-new dataset with zero additional training or fine-tuning. The model relies purely on its generalized knowledge and “lived experience” acquired during its extensive pre-training phase.

How it works: The model identifies patterns in the new data that resemble contexts seen during pre-training and makes an informed prediction based on that experience.
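Zero-shot use boils down to calling a frozen model on data it has never seen. A common ingredient is to normalize the new context window so its scale resembles what the model saw in pre-training, then undo the normalization on the output. The sketch below assumes a hypothetical `pretrained_model(context, horizon)` callable. To keep it runnable, a seasonal-naive stand-in fills that role, and it is in no way a foundation model.

```python
import statistics
from typing import Callable, List

# A frozen forecaster: (scaled context, horizon) -> scaled forecast.
Model = Callable[[List[float], int], List[float]]


def zero_shot_forecast(model: Model, context: List[float], horizon: int) -> List[float]:
    """Apply a frozen model to new data: normalize, predict, denormalize. No training."""
    mean = statistics.fmean(context)
    std = statistics.pstdev(context) or 1.0  # guard against a perfectly flat series
    scaled = [(x - mean) / std for x in context]
    scaled_forecast = model(scaled, horizon)  # no parameters are updated here
    return [y * std + mean for y in scaled_forecast]


def stand_in_model(context: List[float], horizon: int, season: int = 7) -> List[float]:
    """Hypothetical placeholder for a pre-trained TSFM: repeats the last seasonal cycle."""
    last_cycle = context[-season:]
    return [last_cycle[i % season] for i in range(horizon)]


daily_sales = [120, 135, 128, 140, 180, 210, 95] * 4  # illustrative data, not real
forecast = zero_shot_forecast(stand_in_model, daily_sales, horizon=7)
```

Swapping `stand_in_model` for a real TSFM's inference call leaves the workflow unchanged: no gradient steps and no training loop. Some model libraries perform this scaling internally, in which case the wrapper is unnecessary.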

When to use:

- Rapid deployment: When speed to prediction is the top priority

- Baseline forecasts: Establish initial performance benchmarks quickly

- Scalability: Manage and forecast numerous time series concurrently

- Data scarcity: When target-specific training data for fine-tuning is unavailable

Few-shot forecasting (targeted adaptation)

Few-shot forecasting involves minimal fine-tuning by training the pre-trained model on a small batch of examples specific to the target dataset or task. This allows the model to adapt its general knowledge to the new scenario’s unique details, unusual patterns, or particular nuances.

How it works: By learning from a small batch of relevant examples, the model adjusts its internal parameters to better match the specific context.
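The sketch below shows one lightweight flavor of few-shot adaptation under simplified assumptions. The pre-trained model stays frozen. Only a tiny head, a scale `a` and an offset `b` applied to its forecast, is fitted by gradient descent on a handful of target-domain examples. Full fine-tuning would update many or all of the model's weights, but the loop is the same in spirit: predict, measure error, nudge parameters. The frozen predictions and observations are synthetic.

```python
from typing import List, Tuple

# (frozen model prediction, observed value) pairs from the target domain.
Example = Tuple[float, float]


def mse(a: float, b: float, examples: List[Example]) -> float:
    """Mean squared error of the adapted forecast a * pred + b."""
    return sum((a * p + b - y) ** 2 for p, y in examples) / len(examples)


def fine_tune_head(examples: List[Example], lr: float = 0.1, epochs: int = 300):
    """Fit a and b by full-batch gradient descent, starting from the unchanged model."""
    a, b = 1.0, 0.0  # identity head: initially reproduces the pre-trained forecast
    n = len(examples)
    for _ in range(epochs):
        residuals = [(a * p + b - y, p) for p, y in examples]
        grad_a = sum(2 * r * p for r, p in residuals) / n
        grad_b = sum(2 * r for r, _ in residuals) / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


# Synthetic case: the target domain runs 20% above the frozen forecast, plus an offset.
frozen_preds = [0.1, 0.5, -0.3, 0.8, -0.6, 0.2]
observed = [1.2 * p + 0.3 for p in frozen_preds]
examples = list(zip(frozen_preds, observed))

loss_before = mse(1.0, 0.0, examples)
a, b = fine_tune_head(examples)
loss_after = mse(a, b, examples)
```

Starting from the identity head means the adapted model begins exactly where the pre-trained one left off. A handful of examples is then enough to correct a systematic bias.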

When to use:

- Higher accuracy: For domains where predictive performance is essential

- Tuning data available: When a small but relevant dataset exists for fine-tuning

- Unique data characteristics: To adapt the model to specific patterns or behaviors not adequately represented by the general pre-trained data

Trade-off

The choice between these methods depends on your specific needs. Zero-shot offers significant speed and ease for broad applications. Few-shot provides a path to higher accuracy on specific, critical tasks for a modest investment in fine-tuning.

TSFM considerations

The TSFM landscape is rapidly evolving, with several prominent models available, each embodying different design philosophies and strengths. Understanding these options is key to selecting the right tool for your needs.

- Chronos (Amazon): Built on a language model architecture (T5) and trained on billions of time series points converted into discrete tokens. Known for strong zero-shot performance and good probabilistic forecasting capabilities (predicting ranges)

- TimesFM (Google): A decoder-only transformer model. Uses patching (treating contiguous windows of time points as tokens) for efficiency, especially in long-horizon forecasting

- Tiny time mixers (TTM) (IBM): MLP-based, lightweight model (<1M parameters) that forgoes attention mechanisms. Excels in zero-shot forecasting, sometimes outperforming larger models

- TimeGPT (Nixtla): A commercial model accessed via API, designed for ease of use, particularly for zero-shot forecasting. Reported to significantly outperform traditional ML methods on some datasets

- Toto (Datadog): A transformer model built specifically for observability metrics, using proportional factorized space-time attention. Trained on 1 trillion domain-specific data points
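The Chronos entry mentions tokenized time series points. One way to turn real values into a fixed vocabulary, along the lines described for Chronos, works in two steps. First, scale each context window by its mean absolute value. Then assign each scaled value to one of a fixed set of uniform bins. The sketch below is illustrative only. The bin count, value range, and function names are assumptions, not Chronos's actual configuration or API.

```python
from typing import List, Tuple

N_BINS, LOW, HIGH = 100, -5.0, 5.0  # illustrative vocabulary settings (assumptions)
WIDTH = (HIGH - LOW) / N_BINS


def mean_scale(context: List[float]) -> Tuple[List[float], float]:
    """Divide by the mean absolute value of the context (1.0 if the context is all zeros)."""
    scale = sum(abs(x) for x in context) / len(context) or 1.0
    return [x / scale for x in context], scale


def tokenize(context: List[float]) -> Tuple[List[int], float]:
    """Map each scaled value to the index of its uniform bin, clipping out-of-range values."""
    scaled, scale = mean_scale(context)
    tokens = []
    for x in scaled:
        clipped = min(max(x, LOW), HIGH)
        tokens.append(min(N_BINS - 1, int((clipped - LOW) / WIDTH)))
    return tokens, scale


def detokenize(tokens: List[int], scale: float) -> List[float]:
    """Map token ids back to bin centers in the original units."""
    return [(LOW + (t + 0.5) * WIDTH) * scale for t in tokens]


series = [100.0, 110.0, 120.0, 90.0, 105.0]
tokens, scale = tokenize(series)
reconstructed = detokenize(tokens, scale)
```

Values that fall within the same bin share a token. Quantization therefore trades some precision for a discrete vocabulary that a language model architecture can predict token by token. Sampling several token sequences is one way such models produce forecast ranges.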

How do I choose the right TSFM?

Selecting the best TSFM depends on your requirements, resources, and workflow preferences.

- Deployment model & ease of use: Do you prefer open-source models for self-hosting and customization (Chronos, TimesFM, TTM) or a managed API for simplicity and rapid results (TimeGPT)? Are compute resources limited, favoring lightweight options (TTM)?

- Performance & accuracy: How critical is state-of-the-art accuracy? Is strong zero-shot performance sufficient, or do you need to fine-tune on a few examples from your specific datasets? How robust does the model need to be?

- Specific features: Do you need probabilistic outputs (forecast ranges)? Are you forecasting a single metric (univariate) or several related metrics simultaneously (multivariate)? Do you need to incorporate external factors (exogenous variables)?

- Control vs convenience: Do you value complete control over the model and its deployment or the convenience of a ready-to-use API?

- Validation path: Always start with a quick zero-shot test using your own data to gauge a model’s baseline performance and suitability. If accuracy isn’t sufficient, explore few-shot fine-tuning

- Keep current: The field is moving fast. Monitor resources like Hugging Face and academic repositories (arXiv) for new model releases, updates, and benchmark results
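For the zero-shot validation step, a simple holdout backtest is often enough to start. Hide the last few points, forecast them, and compare the result against a seasonal-naive baseline. Score it with MAE and MASE (mean absolute scaled error); a MASE below 1 beats the in-sample seasonal-naive error. The sketch assumes a forecaster with the signature `forecaster(context, horizon)`. `flat_mean` below is a deliberately weak placeholder where a real TSFM call would go, and the weekly data is made up.

```python
from typing import Callable, Dict, List

Forecaster = Callable[[List[float], int], List[float]]


def mae(actual: List[float], predicted: List[float]) -> float:
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def seasonal_naive(context: List[float], horizon: int, season: int = 7) -> List[float]:
    """Baseline: repeat the most recent full season."""
    return [context[-season + (i % season)] for i in range(horizon)]


def mase(train: List[float], actual: List[float], predicted: List[float], season: int = 7) -> float:
    """MAE scaled by the in-sample MAE of a seasonal-naive forecast on the training data."""
    # Undefined (division by zero) if the training data repeats its season exactly.
    naive_errors = [abs(train[i] - train[i - season]) for i in range(season, len(train))]
    return mae(actual, predicted) / (sum(naive_errors) / len(naive_errors))


def holdout_backtest(forecaster: Forecaster, series: List[float], horizon: int,
                     season: int = 7) -> Dict[str, float]:
    """Hold out the last `horizon` points and score the forecaster against a baseline."""
    train, test = series[:-horizon], series[-horizon:]
    predicted = forecaster(train, horizon)
    return {
        "mae": mae(test, predicted),
        "mase": mase(train, test, predicted, season),
        "baseline_mae": mae(test, seasonal_naive(train, horizon, season)),
    }


def flat_mean(context: List[float], horizon: int) -> List[float]:
    """Hypothetical placeholder for a zero-shot TSFM call; replace with a real model."""
    level = sum(context) / len(context)
    return [level] * horizon


weekly = [10, 12, 11, 13, 20, 22, 9]
series = weekly + [x + 1 for x in weekly] + [x + 2 for x in weekly]  # made-up data
report = holdout_backtest(flat_mean, series, horizon=7)
```

A candidate TSFM that cannot beat the seasonal-naive baseline on your own history needs attention before you rely on it. Try few-shot fine-tuning or a different model. A single holdout is noisy, so rolling-origin backtests over several cutoffs give a steadier estimate.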

What are some TSFM use cases?

TSFMs are invaluable across industries where understanding and predicting temporal patterns is crucial for operational efficiency and strategic planning.

- Finance: Forecasting market volatility and liquidity conditions, optimizing trading strategies based on historical patterns, enhancing risk management through more accurate value at risk (VaR) modeling, detecting anomalous transaction patterns for fraud prevention, predicting cash flow needs across treasury operations, and improving credit risk assessment through time-based behavioral analysis

- Aerospace & Defense: Optimizing aircraft maintenance schedules through component failure prediction, forecasting mission-critical parts demand to maintain readiness, analyzing sensor data from flight systems to predict potential issues, optimizing fuel consumption based on flight patterns and conditions, and enhancing satellite operations through orbital pattern analysis

- Retail & e-commerce: Enhancing demand forecasting (especially for seasonal peaks/troughs), optimizing inventory management to reduce stockouts and overstocking, analyzing promotion effectiveness, and predicting website traffic for product launches and sales events

- Energy & utilities: Improving energy load forecasting for grid stability, balancing supply and demand by predicting consumption patterns, forecasting output from renewable energy sources (solar, wind), and optimizing resource dispatch and allocation

- IT operations & monitoring: Predicting server load and CPU usage to prevent outages, forecasting network traffic peaks for resource provisioning, and enabling proactive anomaly detection in system logs

- Manufacturing & IoT: Enabling predictive maintenance by analyzing sensor readings to anticipate equipment failures, optimizing complex production schedules, and improving supply chain forecasting based on real-time operational data

TSFM FAQs

What is a time series foundation model (TSFM)?

A TSFM is an AI model pre-trained on vast amounts of diverse time series data, enabling generalized forecasting across new datasets, often with minimal or no additional training.

How do TSFMs differ from traditional forecasting models?

TSFMs leverage broad, pre-trained knowledge to generalize across tasks and datasets, whereas traditional models are typically built from scratch for specific datasets and require significant task-specific tuning.

Why are transformers often used in TSFMs?

Transformers employ self-attention mechanisms that excel at capturing complex patterns, seasonality, and long-range dependencies across entire time series sequences, making them highly effective for temporal data.

What is zero-shot forecasting?

This involves applying a pre-trained TSFM directly to make predictions on new, unseen data without additional training or fine-tuning on that specific data.

What is few-shot forecasting?

This involves minimal fine-tuning of a pre-trained TSFM using a small number of examples from the target dataset to adapt the model to specific nuances or patterns.

What are the main benefits of using a TSFM?

Key benefits include high accuracy with less data and effort, rapid deployment and adaptability, the ability to handle numerous time series simultaneously, and the capacity to uncover complex patterns that traditional methods may miss.