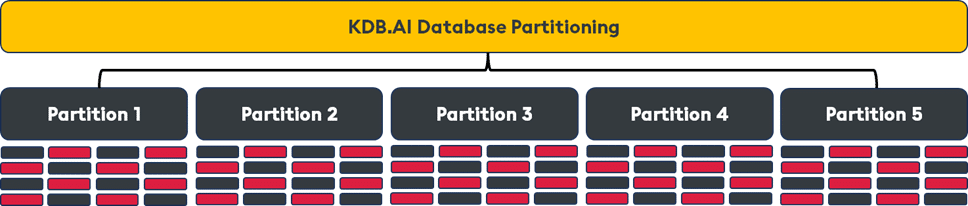

Analyzing massive volumes of unstructured and structured data requires a scalable, high-performance vector database. KDB.AI has emerged as a robust solution for such tasks, in large part because of partitioning: an essential capability that allows KDB.AI to scale without losing performance.

Let’s take a closer look at KDB.AI’s partitioning feature and how it enables users to store, manage, and query massive datasets. We’ll also explore how the partitioning mechanism works, its benefits for large-scale applications, and the types of metadata columns that can be used for partitioning.

Why partitioning matters

At its core, partitioning is a data organization technique that splits a large dataset into smaller, more manageable subsets. Each partition is stored separately, so a search that targets specific partitions only has to scan a fraction of the data rather than the whole dataset. Partitioning becomes necessary as the volume of vectors, and of the metadata accompanying them, grows exponentially.
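To make the idea concrete, here is a minimal Python sketch of a toy partitioned store. Everything in it (`PartitionedStore`, the `sym` column, the 2-D vectors) is hypothetical and is not the KDB.AI client API; it only shows that a search restricted to one partition scans a fraction of the rows while still returning exact nearest neighbors within that partition.

```python
import math
from collections import defaultdict


class PartitionedStore:
    """Toy in-memory vector store (hypothetical, not the KDB.AI API) that
    splits rows into partitions by one metadata column."""

    def __init__(self, partition_column):
        self.partition_column = partition_column
        self.partitions = defaultdict(list)  # partition value -> rows

    def insert(self, row):
        # Each row lands in exactly one partition, chosen by its metadata value.
        self.partitions[row[self.partition_column]].append(row)

    def search(self, query, k, partitions=None):
        """Exact L2 search over the named partitions (all of them if None).
        Returns the k nearest (distance, id) pairs and how many vectors were scanned."""
        keys = list(self.partitions) if partitions is None else partitions
        hits, scanned = [], 0
        for key in keys:
            for row in self.partitions.get(key, []):
                scanned += 1
                hits.append((math.dist(query, row["vector"]), row["id"]))
        hits.sort()
        return hits[:k], scanned


store = PartitionedStore("sym")
for i in range(300):
    store.insert({"id": i, "sym": ("AAPL", "MSFT", "IBM")[i % 3],
                  "vector": [float(i), float(i % 7)]})

top_all, scanned_all = store.search([10.0, 3.0], k=2)
top_aapl, scanned_aapl = store.search([10.0, 3.0], k=2, partitions=["AAPL"])
print(scanned_all, scanned_aapl)  # 300 100
```

Restricting the search to the `AAPL` partition scans 100 of the 300 vectors. The trade-off is visible too: the overall nearest neighbor (id 10) lives in the `MSFT` partition, so partition-scoped searches only make sense when the query genuinely targets that subset.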

The high-dimensional data points used in machine learning and artificial intelligence tasks demand significant data ingestion, retrieval, and analysis. Without an efficient partitioning strategy, querying and searching at this scale would be impractical: slow and computationally expensive.

By partitioning data in KDB.AI, users can benefit from:

- Faster queries: Because searches run only on the relevant partitions, query execution times are reduced

- Improved scalability: Partitioning allows the database to scale, seamlessly handling large-scale enterprise deployments

KDB.AI allows users to partition their vector data based on metadata columns, which serve as a key organizing factor. These metadata columns can be of three main types:

- Date: Useful when the data has a temporal aspect, such as time-series data

- Integer: Applied when data has numerical identifiers, rankings, or discrete values

- Symbol: Useful for categorical data, such as tickers, tags, classifications, or entity identifiers
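The sketch below groups rows by each of these three column kinds. It is a hypothetical helper, not part of KDB.AI: Python `datetime.date`, `int`, and `str` stand in for kdb+ date, integer, and symbol values, and rejecting full timestamps (so that each distinct timestamp does not become its own partition) is a choice made for this illustration.

```python
import datetime as dt
from collections import defaultdict


def partition_rows(rows, column):
    """Group rows by one metadata column (hypothetical helper, not the KDB.AI API).
    Accepts the three column kinds described above: date, integer, symbol (str)."""
    parts = defaultdict(list)
    for row in rows:
        key = row[column]
        # datetime subclasses date: reject it so each timestamp does not become its own partition.
        # bool subclasses int: reject it as not a meaningful integer key.
        if isinstance(key, (dt.datetime, bool)) or not isinstance(key, (dt.date, int, str)):
            raise TypeError(f"{column!r}: unsupported partition type {type(key).__name__}")
        parts[key].append(row)
    return dict(parts)


rows = [
    {"date": dt.date(2024, 1, 2), "rank": 1, "sym": "AAPL"},
    {"date": dt.date(2024, 1, 2), "rank": 2, "sym": "MSFT"},
    {"date": dt.date(2024, 1, 3), "rank": 1, "sym": "AAPL"},
]
by_date = partition_rows(rows, "date")  # 2 partitions: 2024-01-02, 2024-01-03
by_rank = partition_rows(rows, "rank")  # 2 partitions: 1, 2
by_sym = partition_rows(rows, "sym")    # 2 partitions: AAPL, MSFT
```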

Partitioning on these metadata columns gives users significant flexibility to organize datasets around their most relevant features. For instance, partitioning on a “date” column makes queries over specific time periods more efficient. Similarly, if the dataset contains multiple categories or stock tickers, partitioning on a “symbol” column lets users restrict a search to specific symbols.
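One reason date partitioning helps is that partition keys are ordered, so the partitions covering a time window can be located without inspecting the others. The sketch below assumes a hypothetical table with one partition per day and uses Python's `bisect` module to find the partitions for a one-month query; it illustrates partition pruning in general, not how KDB.AI selects partitions internally.

```python
import bisect
import datetime as dt


def partitions_in_range(sorted_dates, start, end):
    """Return the date partitions within [start, end] (inclusive).
    sorted_dates must be sorted ascending; bisect finds the bounds in O(log n)."""
    lo = bisect.bisect_left(sorted_dates, start)
    hi = bisect.bisect_right(sorted_dates, end)
    return sorted_dates[lo:hi]


# Hypothetical table with one partition per calendar day of 2024 (a leap year).
days = [dt.date(2024, 1, 1) + dt.timedelta(days=n) for n in range(366)]
march = partitions_in_range(days, dt.date(2024, 3, 1), dt.date(2024, 3, 31))
print(len(march), "of", len(days), "partitions searched")  # 31 of 366 partitions searched
```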

Partitioning across multiple index types

KDB.AI supports partitioning across several index types, making it versatile for numerous use cases.

- Flat: An in-memory index that compares a query against every stored vector. It is straightforward and exact but may not be optimal for large-scale datasets

- qFlat: An on-disk version of the flat index, well suited to memory-constrained environments

- HNSW (Hierarchical Navigable Small World): A graph-based index that excels at fast approximate nearest neighbor searches, especially for large-scale datasets

- qHNSW: An on-disk variant of HNSW, suited to large datasets where storing all vectors in memory would be cost-prohibitive, and to memory-constrained environments in general

- Sparse: An index designed for keyword search using the BM25 ranking algorithm

- Temporal Similarity Search (TSS): Optimized for time-series data, allowing users to search for patterns, trends, and anomalies over time

Users can apply partitioning across any of these index types, enabling them to tailor search and retrieval capabilities to their specific needs. For example, in time-series analysis, users could combine TSS with partitioning on a “date” column to identify trends or anomalies in specific time periods.
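The TSS-plus-date example can be illustrated with a simplified stand-in. KDB.AI's TSS implementation is not described here, so the sketch below swaps in a plain z-normalized sliding-window Euclidean search; the `series` data and function names are hypothetical. The point is the outer loop: only the requested date partitions are scanned for the pattern.

```python
import datetime as dt
import math
import statistics


def znorm(xs):
    """Z-normalize a window so matching compares shape, not level or scale."""
    mu, sd = statistics.fmean(xs), statistics.pstdev(xs)
    return [0.0] * len(xs) if sd == 0 else [(x - mu) / sd for x in xs]


def best_match(series_by_date, pattern, dates):
    """Slide `pattern` over each requested date partition and return
    (distance, date, offset) of the closest window, or None if nothing fits."""
    q, m = znorm(pattern), len(pattern)
    best = None
    for d in dates:  # only the requested partitions are scanned
        s = series_by_date.get(d, [])
        for i in range(len(s) - m + 1):
            dist = math.dist(q, znorm(s[i:i + m]))
            if best is None or dist < best[0]:
                best = (dist, d, i)
    return best


series = {
    dt.date(2024, 1, 2): [1, 2, 3, 4, 5, 4, 3, 2],
    dt.date(2024, 1, 3): [5, 5, 5, 1, 9, 1, 5, 5],
}
rising = [10, 20, 30]
print(best_match(series, rising, list(series)))           # closest window is on 2024-01-02
print(best_match(series, rising, [dt.date(2024, 1, 3)]))  # restricted to one partition
```

Restricting `dates` to 2024-01-03 returns the best window within that day only, even though a closer match exists on 2024-01-02.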

Searching across partitions with filters

KDB.AI allows users to search partitions using metadata and fuzzy filters. This enables targeted searches based on a table’s metadata columns. For example, a user could filter a search query to focus only on data that matches a particular symbol value, significantly narrowing the search scope and improving query performance.
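As an illustration of exact versus fuzzy filtering on a symbol column, the sketch below selects partitions by Levenshtein edit distance. The function names, the `max_edits` parameter, and the choice of Levenshtein distance are assumptions for this example and do not reflect KDB.AI's filter syntax or the fuzzy-matching algorithms it offers.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution (free if equal)
        prev = cur
    return prev[-1]


def select_partitions(keys, value, max_edits=0):
    """Hypothetical filter: keep partition keys within `max_edits` edits of `value`.
    max_edits=0 behaves like an exact metadata filter; >0 behaves like a fuzzy one."""
    return [k for k in keys if levenshtein(k, value) <= max_edits]


symbols = ["AAPL", "APPL", "MSFT", "AAPL.O", "IBM"]
print(select_partitions(symbols, "AAPL"))               # ['AAPL']
print(select_partitions(symbols, "AAPL", max_edits=1))  # ['AAPL', 'APPL']
```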

Beyond local KDB.AI tables, KDB.AI also extends its partitioning capabilities to external kdb+ historical databases (HDBs). This lets users apply the same high-performance partitioning strategies to their kdb+ data, so they can benefit from KDB.AI’s capabilities even when working with massive HDBs.

By enabling partitioning on metadata columns, supporting multiple index types, and facilitating high-speed parallel ingestion, KDB.AI lets users scale AI workloads across both structured time-series data and vector representations of unstructured data. The result is faster, more efficient vector search for enterprise-level AI and machine learning applications.
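Parallel ingestion fits naturally with partitioning because rows bound for different partitions can be written independently. The following hypothetical sketch groups rows by a partition column and hands each batch to its own worker thread; it models only that structure and says nothing about how KDB.AI schedules ingestion internally. In CPython, threads mainly help when the writes are I/O-bound.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def ingest_parallel(rows, column, workers=4):
    """Hypothetical sketch: group rows by partition, then write each partition's batch
    on its own worker. Partitions are disjoint, so workers never touch the same list."""
    batches = defaultdict(list)
    for row in rows:
        batches[row[column]].append(row)

    store = {key: [] for key in batches}  # create every partition up front, before threads start

    def write(key):
        store[key].extend(batches[key])   # one worker writes one partition
        return key, len(batches[key])

    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = dict(pool.map(write, list(batches)))
    return store, counts


rows = [{"id": i, "sym": f"SYM{i % 5}"} for i in range(1000)]
store, counts = ingest_parallel(rows, "sym")
print(counts)  # each of SYM0..SYM4 receives 200 rows
```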

For more information on scaling your vector search and other feature updates, check out our latest release notes, then begin your journey by signing up for a free trial of KDB.AI.