Ensuring data quality for industrial systems and applications

In today’s data-driven world, it has become essential for companies using IoT technologies to address the challenges posed by exploding sensor data volumes. However, the sheer scale of data being produced by tens of thousands of sensors on individual machines is outpacing the capabilities of most industrial companies to keep up.

According to a survey by McKinsey, companies are using only a fraction of the sensor data they collect. In one case, managers at a gas rig interviewed for the survey said they used only one percent of the data generated by their rig’s 30,000 sensors when making decisions about maintenance planning. At the same time, McKinsey found serious capability gaps that could limit an enterprise’s IoT potential. In particular, many companies in the IoT space are struggling with data extraction, management, and analysis.

Timely, accurate data is critical to provide the right information at the right time for business operations to detect anomalies, make predictions and learn from the past. Without good quality data, companies hurt their bottom line. Faulty operational data can have negative implications throughout a business, hurting performance on a range of activities from plant safety to product quality to order fulfillment. Bad data has also been responsible for major production and/or service disruptions in some industries.

Data quality challenges

Sensor data quality issues have been a long-standing problem in many industries. For utilities, which collect sensor data from electric, water, gas, and smart meters, the process for maintaining sensor data quality is called “validation, estimation and editing,” or VEE. It is an approach that other industries can use as a model when looking to ensure data quality from high-volume, high-velocity sensor data streams.

Today, business processes and operations are increasingly dependent on data from sensors, but the traditional approach of periodically sampling data to inspect its quality is no longer sufficient. Conditions can change so rapidly that anomalies and deviations may not be detected in time with traditional techniques.

Causes of bad data quality include:

- Environmental conditions – vibration, temperature, pressure or moisture – that can impact the accuracy of measurements and operations of asset/sensors.

- Misconfigurations, miscalibrations or other types of malfunctions of asset/sensors.

- Different manufacturers and configurations of sensors deliver different measurements.

- Loss of connectivity interrupts the transmission of measurements for processing and analysis.

- Tampering with sensors/devices and data in transit, leading to incorrect or missing measurements.

- Loss of accurate time measurement because of the use of different clocks, for example.

- Out-of-order, or delayed, data capture and receipt.

Validation, Estimation, and Editing (VEE)

The goal of VEE is to detect and correct anomalies in data before it is used for processing, analysis, reporting or decision-making. As sensor measurement frequencies increase and are automated, VEE is expected to be performed on a real-time basis to support thousands of millions of sensor readings per second from hundreds of millions of sensors.

The challenge for companies seeking actionable operational and business knowledge from their large scale sensor installations is that they are not able to keep up with the VEE processes needed to support data analytics systems because of the high volume and velocity of data. This is why so many companies today are using less than 10% of the sensor data they collect.

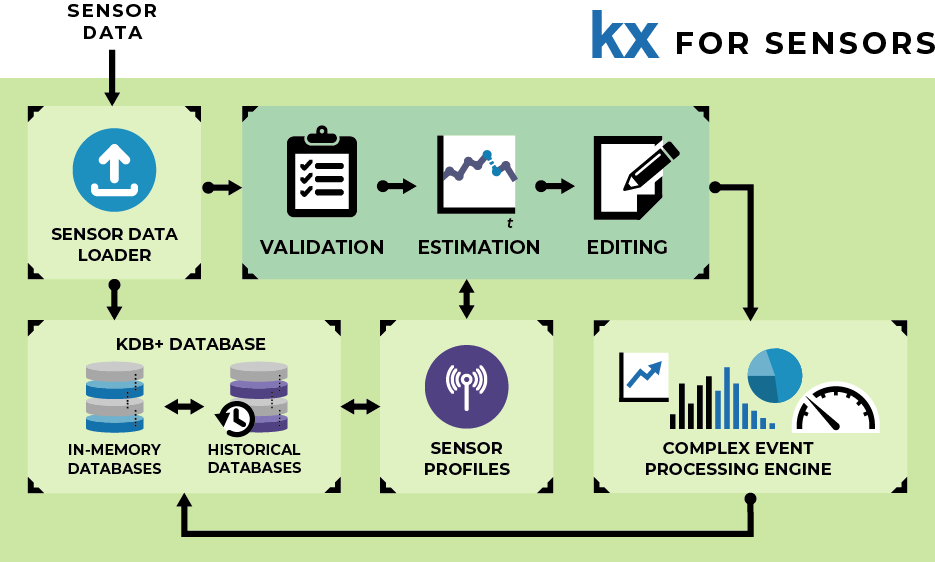

To combat VEE system overload, KX for Sensors has been developed as an integrated VEE and analytics solution for ingesting, validating, estimating and analyzing massive amounts of streaming, real-time and historical data from sensors, devices, and other data sources. It is a single platform for sensor data processing, storage, and analytics.

Powered by a bitemporal data model with rich analytics, KX for Sensors supports any sensor, frequency, tag and attribute with nanosecond precision. It scales to support millions of sensors and trillions of records, and is optimized for event processing, calculations, aggregations, alerting and reporting.

Ensuring data quality

The goal of all data quality frameworks is to detect and correct anomalies in data before using it in analysis, reporting or decision-making processes. While VEE focuses predominantly on the correction side, a robust implementation that supports both real-time and retrospective analytics may assist in detection too. Detection may take the form of root-cause analysis that identifies, say, large fluctuations in measurements outside defined parameters, which suggest component failure. It may also surface unexplained deviations from normal patterns, which may signify environmental issues or perhaps a more insidious cyber threat that complementary analytics can investigate further.

The VEE process is therefore applied to incoming data so that the data can be certified and used in analytics. Due to the volume and velocity of sensor data streams in Industry 4.0, VEE needs to be performed on a real-time basis.

So what are some of the techniques for ensuring sensor data quality and availability for industrial systems?

- Capturing quality and other flags.

- Validation

  - Verifying data authorization.

  - Verifying data completeness.

  - Verifying data reasonableness.

- Estimation and Editing

  - Adjusting the data according to configuration rules.

  - Logging and versioning of data and adjustments.

Data pipeline for sensor validation and estimation.

The values associated with an initial sensor reading can change as a result of the VEE process. Accordingly, all steps in the VEE process must be audited and historical values maintained, so that every new value can be traced back to the values it replaced. This is important because some analytics and decisions can only be based on valid actual data, while others can use estimated data.
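As a rough illustration of that traceability requirement, the sketch below keeps every version of a reading in an append-only history. It is not how KX for Sensors stores data; the class names, the status labels ("actual", "invalid", "estimated", "edited") and the `value_for` helper are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class Version:
    value: float
    status: str        # assumed labels: "actual", "invalid", "estimated", "edited"
    reason: str
    recorded_at: datetime


@dataclass
class Reading:
    sensor_id: str
    measured_at: datetime
    history: List[Version] = field(default_factory=list)

    def record(self, value: float, status: str, reason: str) -> None:
        """Append a new version; earlier versions are never overwritten."""
        self.history.append(
            Version(value, status, reason, datetime.now(timezone.utc))
        )

    def value_for(self, allow_estimates: bool) -> Optional[float]:
        """Return the current value if its status is acceptable, else None."""
        if not self.history:
            return None
        latest = self.history[-1]
        accepted = {"actual"}
        if allow_estimates:
            accepted |= {"estimated", "edited"}
        return latest.value if latest.status in accepted else None


# Hypothetical sensor: the raw value fails validation and is then estimated.
reading = Reading("pump-7", datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc))
reading.record(-999.0, "invalid", "raw value failed range check")
reading.record(41.5, "estimated", "linear interpolation")

print(reading.value_for(allow_estimates=True))    # consumer that accepts estimates
print(reading.value_for(allow_estimates=False))   # consumer that needs measured data
print(len(reading.history))                       # every version is retained
```

Because nothing is overwritten, a consumer that requires measured data can reject the estimate while an auditor can still see both the raw value and the reason it was replaced.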

Validation

Validation checks that the incoming data meets expected standards and is within anticipated tolerances, using a configurable series of rules and properties. This includes checking the syntactic correctness of the data received, verifying that transmitting organizations are known to the system, and making sure that incoming data correlates with configurations and other information in the system. Ideally, validation should:

- Detect every data gap or anomaly.

- Provide detections in real time.

- Correctly flag exceptions that may require some sort of action.

- Automatically adapt to changing usage patterns.

KX for Sensors’ validation checks can detect data errors or conditions arising from a number of scenarios, including improper sensor operation, readings that exceed or fall below predefined variances or tolerances, inactivity, and hardware or software malfunctions. For example, the “flag check” tests conditions pertaining to sensor state, including a general error check, test mode check, time change check, sensor diagnostic check, power interruption check, and partial interval check.
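The sketch below shows how three such checks (a flag check, a tolerance check and a completeness check) might be combined in one pass over time-ordered readings. It is a simplified illustration, not the KX for Sensors rule engine: the flag names, the dictionary layout of a reading and the `validate` function are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical status-flag names, loosely modelled on the flag checks above.
BLOCKING_FLAGS = {"general_error", "test_mode", "time_change",
                  "diagnostic", "power_interruption", "partial_interval"}


def validate(readings, low, high, interval):
    """Return (index, issue) pairs for a time-ordered list of readings.

    Each reading is a dict with keys "ts" (datetime), "value" (float)
    and "flags" (set of str).
    """
    issues = []
    previous_ts = None
    for i, r in enumerate(readings):
        # Flag check: any blocking sensor-state flag marks the reading.
        raised = sorted(r["flags"] & BLOCKING_FLAGS)
        if raised:
            issues.append((i, "flag:" + ",".join(raised)))
        # Reasonableness check: value must lie inside the configured tolerance.
        if not (low <= r["value"] <= high):
            issues.append((i, "out_of_range"))
        # Completeness check: a step longer than the expected interval is a gap.
        if previous_ts is not None and r["ts"] - previous_ts > interval:
            issues.append((i, "gap_before"))
        previous_ts = r["ts"]
    return issues


t0 = datetime(2024, 1, 1)
step = timedelta(minutes=15)
sample = [
    {"ts": t0,            "value": 5.0,   "flags": set()},
    {"ts": t0 + step,     "value": 250.0, "flags": set()},
    {"ts": t0 + 4 * step, "value": 8.0,   "flags": {"power_interruption"}},
]
issues = validate(sample, low=0.0, high=100.0, interval=step)
for index, issue in issues:
    print(index, issue)
```

Returning issues rather than dropping readings keeps the decision about estimation or manual editing separate from detection.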

Another technique for ensuring data quality is to deploy redundant measurement devices, together with mechanisms for determining which measurement is accurate and for handling differences between two or more devices. This involves comparing data from multiple sensors and either choosing which sensor readings to use or applying a formula, such as taking the average, to derive the reading to be used.
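One possible way to reconcile redundant sensors is sketched below: readings that sit too far from the median are rejected and the rest are averaged. The median-based rejection rule, the `tolerance` parameter and the `reconcile` function are assumptions chosen for illustration; the article does not specify which formula is used in practice.

```python
from statistics import mean, median


def reconcile(values, tolerance):
    """Combine simultaneous readings from redundant sensors.

    Readings further than `tolerance` from the median are treated as
    outliers and the result is the mean of the remaining readings.
    Returns (combined_value, rejected_values). If no reading is close
    enough to the median, returns (None, all_values) so the case can be
    escalated for manual review.
    """
    if not values:
        raise ValueError("need at least one reading")
    centre = median(values)
    kept = [v for v in values if abs(v - centre) <= tolerance]
    rejected = [v for v in values if abs(v - centre) > tolerance]
    if not kept:
        return None, list(values)
    return mean(kept), rejected


# Three hypothetical temperature sensors, one of which has drifted.
combined, rejected = reconcile([20.1, 19.9, 35.0], tolerance=1.0)
print(combined, rejected)
```

With an even number of sensors that disagree strongly, the median can fall between two readings and reject both, which is why the sketch returns `None` rather than guessing.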

Estimation and Editing

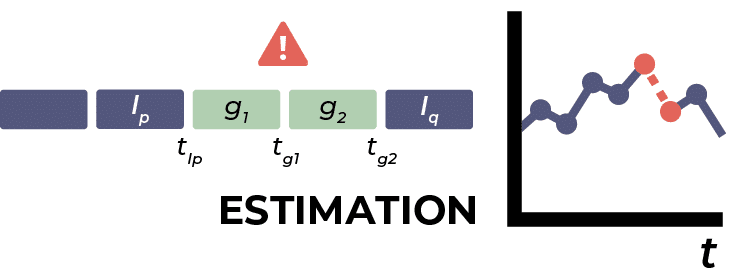

For applications that need data for analysis or billing, even when data quality is suspect or missing, the data will need to be estimated or edited. Estimation is where values of missing, or invalid, readings are created according to a configurable set of rules and properties. Editing is used when manual verification and editing of certain readings is required. It is used in those rare cases where missing or invalid data cannot automatically be corrected, for example when there is insufficient data upon which an estimate can be based.

KX for Sensors implements a number of estimation algorithms that permit the fair and manageable handling of missing data under the many real-world circumstances that can arise. These algorithms include estimation using a constant value, point-to-point linear interpolation, historical estimation, and profile estimation using an expected pattern of sensor data. In addition, in certain situations, such as when quantities are out of tolerance, readings can be transitioned to an exception state in which they require manual intervention to resolve.

To illustrate, consider the linear estimation algorithm. During validation, a gap is detected between readings Ip and Iq, covering time intervals tg1 and tg2. The linear estimation algorithm essentially draws a straight line between Ip and Iq and calculates the missing readings g1 and g2 so that they fall on that line (e.g. if Ip = 5 and Iq = 8, then g1 and g2 would be calculated as 6 and 7).
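The same calculation can be written as a short function. This is a minimal sketch that assumes the missing intervals are evenly spaced between the two known readings, so the k-th estimate is Ip + k × (Iq − Ip) / (n + 1) for a gap of n readings; the function name `linear_fill` and its parameters are made up for this example.

```python
def linear_fill(i_p, i_q, gap_count):
    """Estimate `gap_count` evenly spaced readings between i_p and i_q.

    Assumes the missing intervals are equally spaced in time, so each
    estimate lies on the straight line joining the two known readings.
    """
    if gap_count < 1:
        return []
    step = (i_q - i_p) / (gap_count + 1)
    return [i_p + step * k for k in range(1, gap_count + 1)]


# The example from the text: Ip = 5, Iq = 8, two missing readings.
estimates = linear_fill(5, 8, 2)
print(estimates)   # [6.0, 7.0]
```

If readings arrive at irregular times, the step would need to be weighted by the actual timestamps instead of the reading count.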

Conclusion

VEE presents a model for best-practice checks and corrections of data that can be implemented for all IoT applications. From utilities to Industry 4.0, building and implementing Industrial IoT systems in an era of massive amounts of sensor data starts with validating, estimating and editing the data. Anomalies need to be flagged, errors need to be fixed, and all changes need to be fully traceable.

New tools, like KX for Sensors, are needed for this new era of digital information overload. Its rich real-time analytics present a significant opportunity to optimize business operations and customer engagement and to offer new services and products. In addition to data analysis and management, it has been proven to provide measurably faster and more efficient results for fault detection, settlement and reconciliation, data marts, and the surveillance and monitoring of data.