Time Series Database

TIME SERIES DATA IS EVERYWHERE

ENABLING ORGANIZATIONS TO MAKE BETTER DECISIONS

‘Time Series’ is not new. It has long been at the heart of commerce – most notably in the form of Financial market price ticks, but it is now equally widespread across industry and has evolved from minutes and seconds – to nano and milliseconds.

The connected world expands exponentially every year. Over the last ten years, we have experienced growth in the volume and velocity of data from ever-expanding cloud infrastructure, the emergence of 5G, and the rapid adoption of AI and machine learning across market trading platforms, smart meters, machine tools, and a myriad of IoT applications.

Fortunately, the constant stream of human and machine-generated data can now be captured and analyzed faster than ever before.

Businesses need to understand how to efficiently store and query the data and evaluate what platforms and analytic tools can help deliver the best-informed insights. More importantly, there’s an opportunity to use time-series data to make better-informed decisions and drive real business benefits.

KX – PURPOSE BUILT FOR TIME SERIES DATA

“When it comes to time series data, you want to be using a purpose-built time series platform to handle your data streams. You need infinite scalability, particularly if you’re deploying on the cloud”.

Watch as Kathy Schnieder and James Corcoran from KX join Mike Matchett from TruthinIT in a discussion about time series data and the KX tools that accelerate adoption.

WHAT IS A TIME SERIES DATABASE?

POWERFULLY MANAGE BIG DATA FOR TIMELY INSIGHTS

Time series databases are specifically designed to capture and handle time-stamped data.

They can deliver significant improvements in storage space and performance over general-purpose databases and have the following key features:

- Optimized for time-based storage and retrieval.

- Every entry has a time stamp.

- Data gets appended rather than changed in situ.

- Different data sets can be associated by ‘time.’

Why does time series database matter?

Time Series databases support improved, and faster decision-making based on what is occurring now, what happened in the past, and what is predicted to occur in the future.

A time series database can help:

- Automate applications and systems

- Deliver real-time and predictive analytics

- Provide operational intelligence across processes

Many businesses look at their big data applications purely through an “architecture” lens and miss the vital point that the unifying factor across all data is its dependency on time. For big data applications, the ability to capture and factor in “time” is the key to unlocking value.

Data exists and changes – in real-time, earlier today or further in the past. Time series databases are highly performant, scalable, and usable ways to handle exploding data sizes. They offer specialized features not supported in standard SQL and no-SQL languages and can enable your team to capture and perform the analysis required to support ML and AI models.

Time series datasets, databases and analytics can deliver a meaningful snapshot of your business – from any specific point in time.

Each piece of data exists and changes in real-time, earlier today or further in the past. However, unless they are all linked together in a way that firms can analyze, there is no way of providing a meaningful overview of the business at any specific point in time.

Some firms have struggled to introduce this due to the limitations of their technology. Some architectures don’t allow for time-based analysis and struggle with recording and querying it in a highly efficient way. It’s a common issue resulting from opting for a seemingly safe technology choice. It’s also one of the reasons why over the past 24 months that time-series databases (TSDBs) have emerged from software developer usage patterns as one of the fastest-growing categories of databases.

TREND OF THE LAST 12 MONTHS

WHY SHOULD I USE THE KDB+ TIME SERIES DATABASE?

KDB+ IS A TIME SERIES DATABASE OPTIMIZED FOR BIG DATA ANALYTICS

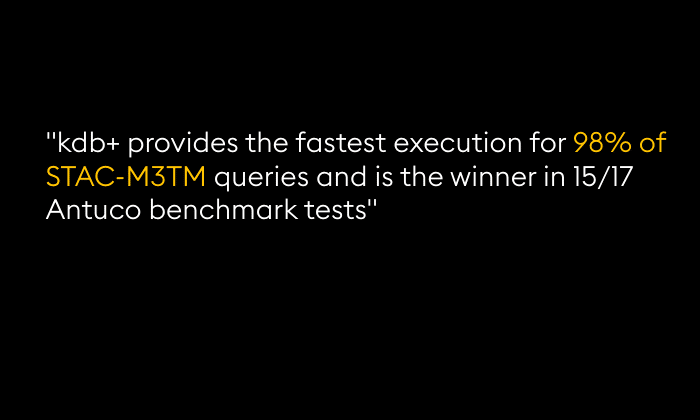

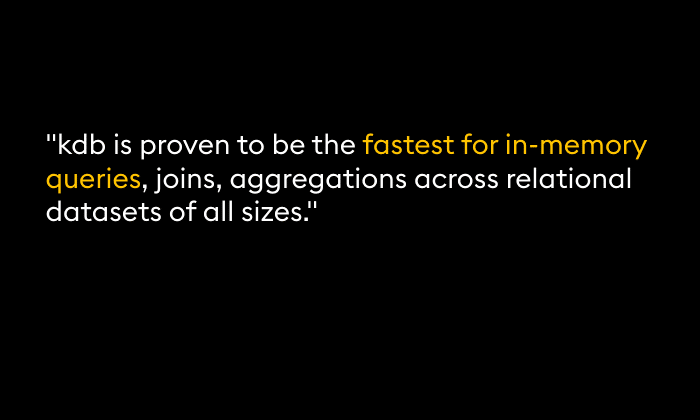

Kdb+, which underpins all KX solutions, is the world’s fastest and most efficient time series database and analytics engine, designed from the outset for high-performance, high-volume data processing and analysis. The columnar design of kdb+ and use of the same in-memory architecture for both real-time and historical data means it offers greater speed and efficiency than typical relational databases – read about our latest STAC-M3 Financial Services Benchmarking here. And its native support for time-series operations vastly improves both the speed and performance of queries, aggregation, and analysis of structured data.

Kdb+ is also different from other popular databases because it:

- can correlate streaming data with historical datasets to deliver contextual insights – in real-time.

- has a built-in array language, q, allowing it to operate directly on the data in the database, removing the need to export data to other applications for analysis.

- supports both time series and relational datasets, enabling joins and analysis across time series, referential, and meta data.

- fully uses the intrinsic power of modern multi-core hardware architectures and supports many interfaces with a simple API for easy connectivity to external graphical, reporting, and legacy systems.

For applications and critical decisions that require continuous and contextual intelligence, kdb+ accelerates your speed to business value. To experience the kdb+ difference, watch the demo, try the 12-month free kdb+ Personal Edition, or speak with our sales colleagues today.

Demo kdb, the fastest time-series data analytics engine in the cloud

WHY TIME SERIES INSTEAD OF A TRADITIONAL DATABASE?

Time Series – from KX – is significantly faster and can effectively deliver “context”.

Combining multi-layered solutions using different tools adds complexity and cost – and falls short on scale and usability. In many cases, the datasets will live on various platforms – such as a traditional database for historical data, in-memory for recent data, and event processing software for real-time data. Traditional databases cannot span multiple domains effectively with acceptable performance.

The answer is one unifying piece of architecture.

All data is, in some way, time-dependent. By unifying the underlying technology, businesses can move beyond simply storing and analyzing a subset of data to making insightful correlations across the company – and delivering significant cost-benefit.

It’s all about time.

Time really does mean money – so preserve your software and RDBMS technology investment while addressing the performance, scale, and cost challenges of working with high data volumes. Being able to implement alongside existing systems and processes is one reason why KX is the go-to time series database and analytics engine for the most ambitious organizations on the planet.

High-Frequency Data Benchmarks for kdb+

STAC-M3TM

STAC-M3TM represents a truly independent set of benchmarks for the financial services industry and bypasses all proprietary benchmarking tooling used by technology vendor. It is the industry standard testing solution for high-speed analytics on tick-by-tick market data.

“STAC” and all STAC names are trademarks or registered trademarks of the Securities Technology Analysis Center, LLC.”

Imperial College London

Imperial College London undertook a database scenario for storing and querying data from stock and cryptocurrency exchanges, running benchmarks across trade data, order book, complex query, writing efficiency – persistant storage, and storage efficiency.

According to Ruijie Xiong, Department of Computing, Imperial College London, “kdb+ is able to fast ingest data in bulk, with a good compression ratio for storage efficiency. Kdb+ has stable low query latency for all benchmarks including read queries, computational intensive queries, and complex queries”.

Check out the full benchmark here.

DBOps Benchmarks

DBOps is a public benchmark comparing the performance of a number of open-source database tools and technologies. It defines a set of reproducible tests to measure their performance executing typical queries that a data scientist would run.

The tests include mathematical/statistical calculations, group-by operations and joins over a range of data sets to show performance under different conditions.

Time Series Benchmark Suite

The Time Series Benchmark Suite (TSBS) is a collection of Go programs originally developed by InfluxData that are used to generate datasets and then benchmark read and write performance of various time-series databases. The intent is to make the TSBS extensible so that a variety of use cases (e.g., devops, IoT, finance, etc.), query types, and databases can be included and benchmarked.

What data are the tests based on?

- Synthetically-generated IoT stream of data containing telemetry from trucks in the form of “readings” and “diagnostics”.

- Contains out-of-order, missing and/or empty entries, forcing the tests to cater for real-world scenarios.