By Eduard Silantyev

Eduard Silantyev is an electronic trading systems developer and a cryptocurrency market microstructure specialist based in London. Follow Eduard on LinkedIn or Medium to read more of his blogs about cryptocurrencies. The original title of this blog is “Cryptocurrency Market Microstructure Data Collection Using CryptoFeed, Arctic, kdb+ and AWS EC2 | Handling asynchronous high-frequency data.”

Being an avid researcher of cryptocurrency market microstructure, I have considered many ways of collecting and cleaning high-frequency data from cryptocurrency venues. In this article, I will describe what I believe to be, by far, the best way to continuously collect and store such data. Specifically, we will collect asynchronous trade data from a number of exchanges.

Fragmentation

Before we begin exploring way to harness crypto data, we need to understand its characteristics. One of its main features is fragmentation. If you have any experience trading cryptocurrencies, you will know that there are dozens of exchanges that handle the lion’s share of traded volume. That means that in order to capture all the data that comes out of these venues, you will have to be listening to updates from each one of them. Fragmentation also means that data is asynchronous, or having non-deterministic arrival rates. Due to almost complete lack of regulation, central limit order book (CLOB) and order routing are alien concepts on the crypto scene.

Technological Inferiority

If you were around in early December 2017, you would have know that virtually each and every sizeable crypto venue had matching engine dysfunction when craze from retail and institutional investors overwhelmed fragile exchanges. That being said, technological issues and maintenance periods are a common occurrence, as simple Google search will yield. That means our data collection engine needs to be resonant with such instabilities.

Enter CryptoFeed

CryptoFeed is a Python based library built and maintained by Bryant Moscon. The library takes advantage of Python asyncio and websockets libraries to provide a unified feed from arbitrary number of supported exchanges. CryptoFeed has a robust re-connection logic in the case exchange APIs temporarily stop sending WebSocket packets for one reason or another. As a first step, clone the library and run this example. It demonstrates how easy it is to combine streams from different exchanges into one feed.

That was nice and easy, but you somehow need to persist the data you receive. CryptoFeed provides a nice example of storing data in Man AHL’s open-source Arctic database. Arctic wraps around MongoDB to provide a nice interface for storing Python objects. I even had the pleasure of contributing to Arctic during Man AHL’s 2018 Hackathon which was held back in April!

Arctic and Frequently Ticking Data

The problem of using Arctic for higher frequency data is that every time you have an update, Arctic has to do a bunch of overhead routines in order to store the Python object (usually a pandas DataFrame) in Mongo. This becomes non-feasible if you do not perform any data batching. When reading the data back, Arctic would need to decompress the objects. In my experience, this creates a huge delay in read/write times. There are, however, very nice solutions to this problem and one of them is using Kafka PubSub patterns to accumulate sufficient data before sending it to Arctic, but such patterns are beyond the scope of this article. That being said, we need another storage mechanism to be oblivious to asynchronous writing operations.

Enter kdb+

kdb+ is a columnar, in-memory database that is unparalleled in the storage of time-series data, owned and maintained by KX. Invented by Arthur Whitney, kdb+ is used across financial, telecom, manufacturing industries (and even F1 teams) to store, clean and analyze vast amounts of time-series data. In the realm of electronic trading, kdb+ serves purposes of message processing, data engineering, and data storage. kdb+ is powered by the built-in programming language q. The technology is largely proprietary, however, you may use the free 32-bit version for academic research purposes, which is what we intend to use it for.

The primary way to get your hands on kdb+ is via the official download. Recently, kdb+ was added to Anaconda package repository, from where it can be conveniently downloaded and installed. Next step is to create a custom callback for CryptoFeed such that it directs incoming data to kdb+.

CryptoFeed and kdb+

First of all we need a way to communicate between Python and q. qPython is a nice interface between the two environments. Install is as:

pip install qpythonWe are now ready to start designing the data engine, but before doing that make sure you have a kdb+ instance running and listening on a port:

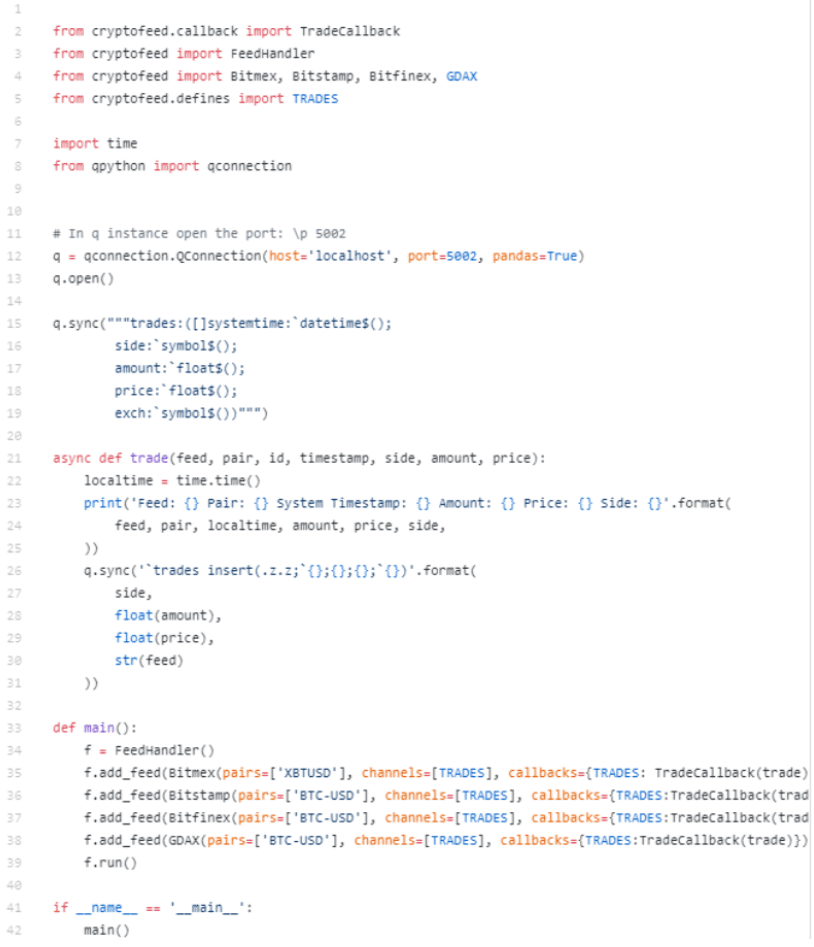

q)\p 5002Next up, we walk through a Python script that controls the flow of data. With the example below we collect trade data from BitMEX, BitStamp, Bitfinex and GDAX:

First, we start by importing the needed modules. Next up, we connect to kdb+ instance running locally and define a schema for our trades table. Next, we define a callback that will be executed every time we have an update. This really embodies the conciseness of CryptoFeed as it abstracts all implementation details from user, who is concerned with writing his own logic for message handling. As you can tell, we insert a row into trades on every new message. Finally, we combine different feeds into a unified one and run the script.

In the Python console you should be able to see trade messages arriving from different venues:

...

Feed: BITSTAMP Pair: BTC-USD System Timestamp: 1535282741.491973 Amount: 0.098794800000000002 Price: 6680.8900000000003 Side: SELL

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282741.588559 Amount: 2060 Price: 6676 Side: bid

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282742.5773056 Amount: 2000 Price: 6675.5 Side: ask

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282742.6642818 Amount: 100 Price: 6676 Side: bid

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282744.3457057 Amount: 4500 Price: 6676 Side: bid

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282744.3717065 Amount: 430 Price: 6675.5 Side: ask

Feed: BITMEX Pair: XBTUSD System Timestamp: 1535282744.372708 Amount: 70 Price: 6675.5 Side: ask

Feed: BITSTAMP Pair: BTC-USD System Timestamp: 1535282745.0435169 Amount: 0.67920000000000003 Price: 6674.3500000000004 Side: BUY

Feed: BITSTAMP Pair: BTC-USD System Timestamp: 1535282745.3331609 Amount: 0.31688699999999997 Price: 6674.3500000000004 Side: BUY

Feed: GDAX Pair: BTC-USD System Timestamp: 1535282745.5737412 Amount: 0.00694000 Price: 6684.78000000 Side: ask

...That’s looking good. To view the data, pull up the terminal window with kdb+ instance and view the trades table:

q) tradesThis will output the table:

systemtime side amount price exch

-------------------------------------------------------

2018.08.26T11:28:56.166 bid 1 6673.5 BITMEX

2018.08.26T11:28:56.200 bid 0.01971472 6684.9 BITFINEX

2018.08.26T11:28:56.201 bid 0.002 6684.9 BITFINEX

2018.08.26T11:28:56.202 bid 0.002 6684.9 BITFINEX

2018.08.26T11:28:56.203 bid 0.00128528 6684.9 BITFINEX

2018.08.26T11:28:56.205 ask 0.1889431 6684.8 BITFINEX

2018.08.26T11:28:56.206 ask 0.005 6684.7 BITFINEX

2018.08.26T11:28:56.207 ask 0.005 6684.7 BITFINEX

2018.08.26T11:28:56.208 ask 0.49 6684.7 BITFINEX

2018.08.26T11:28:56.209 ask 0.5 6684.7 BITFINEX

2018.08.26T11:28:56.210 ask 0.1 6683.8 BITFINEX

2018.08.26T11:28:56.211 ask 0.9577479 6683 BITFINEX

2018.08.26T11:28:56.212 ask 0.03 6683 BITFINEX

2018.08.26T11:28:56.213 ask 0.01225211 6683.2 BITFINEX

2018.08.26T11:28:56.214 ask 1 6683.7 BITFINEX

2018.08.26T11:28:56.215 ask 0.00015486 6683.8 BITFINEX

2018.08.26T11:28:56.216 ask 0.00484514 6683.8 BITFINEX

2018.08.26T11:28:56.217 bid 0.00071472 6684.9 BITFINEX

2018.08.26T11:28:56.218 bid 0.002 6684.9 BITFINEX

2018.08.26T11:28:56.219 ask 0.05501941 6683.8 BITFINEXNow what if you wanted to view trades arriving only from GDAX? You could do so via select statement:

q)select from trades where exch=`GDAXAs expected we will see trades only from GDAX:

systemtime side amount price exch

----------------------------------------------------

2018.08.26T11:29:05.641 ask 0.00694 6683.88 GDAX

2018.08.26T11:29:12.254 ask 0.0092 6683.88 GDAX

2018.08.26T11:29:14.460 ask 0.045691 6683.88 GDAX

2018.08.26T11:29:15.322 ask 0.00694 6683.88 GDAX

2018.08.26T11:29:18.373 bid 0.01574738 6683.89 GDAX

2018.08.26T11:29:23.770 bid 0.0071 6683.89 GDAX

2018.08.26T11:29:25.599 ask 0.00237 6683.88 GDAX

2018.08.26T11:29:29.895 ask 0.03472 6683.93 GDAX

2018.08.26T11:29:33.464 bid 0.001 6683.94 GDAX

2018.08.26T11:29:33.467 bid 0.001 6684.04 GDAX

2018.08.26T11:29:33.475 bid 0.00036078 6686 GDAX

2018.08.26T11:29:34.224 bid 0.0126 6686 GDAX

2018.08.26T11:29:34.529 bid 0.00565852 6686 GDAX

2018.08.26T11:29:44.334 bid 0.00745594 6686 GDAX

2018.08.26T11:29:45.061 ask 0.0091 6685.99 GDAX

2018.08.26T11:29:47.790 ask 0.0046 6685.99 GDAX

2018.08.26T11:29:52.873 bid 0.1739248 6686 GDAX

2018.08.26T11:29:52.876 bid 0.00340341 6686 GDAX

2018.08.26T11:29:52.883 bid 0.001 6688.87 GDAX

2018.08.26T11:29:52.886 bid 0.03248652 6690 GDAXYou may have noticed that we are using system time as timestamp. This is purely to normalise times from different exchanges as they are located in different time zones. You are welcome to dig in and get timestamps from each exchange. Also, since kdb+ stores data in memory, you will need a mechanism that will flush the data to disk every given period to free space on RAM. For this purpose, you may want to look at tick architecture provided by KX.

AWS EC2 for Cloud

Now you may be wondering whether it is possible to collect data continuously from your home PC / laptop. That depends on quality of your internet connection. If you live in the UK like myself, you will know that ISPs are rather non-reliable. So next up, it makes sense to set up a server that will ensure continuous up-time. In my opinion, AWS EC2 is a great option for this. You can also get a free 12 months trial and to try out a small instance, which I can assert is enough for the task at hand. Upload your files / scripts via SFTP protocol and you are good to go.

Concluding Thoughts

In this article we have explored a convenient and robust way of collecting crypto data from multiple venues. Along the way, we have used CryptoFeed Python library, MAN AHL Arctic, kdb+ and AWS EC2. As for next steps, you can try out collecting order book data along with trade data. Please let me know in the comments below whether you have any questions regarding this. It would be good to hear constructive criticism on this design. I will be keen to hear what architecture you use for data collection. Don’t forget to clap!

Small changes were made to this re-blog to conform with KX Style.