By Steve Wilcockson

AI has had many false dawns, but we are far removed from the early days of “fuzzy logic.”

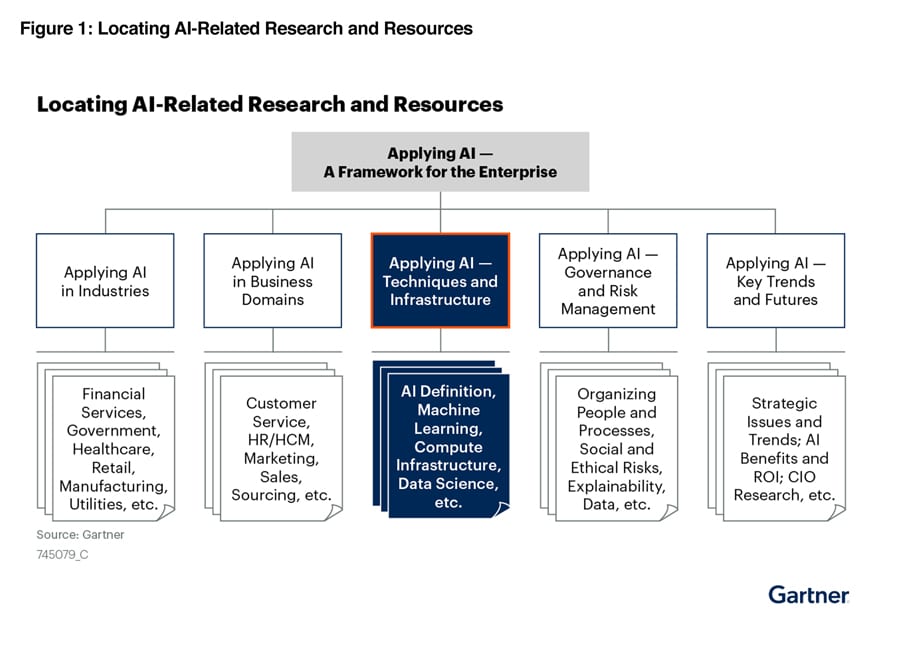

With the recent explosion of generative AI, AI is clearly evolving from a sideline research curiosity into an exciting core business imperative. The Gartner “Applying AI — A Framework for the Enterprise”, illustrated below, outlines how enterprises should approach their AI initiatives:

In outlining the fundamental techniques and practices for implementing AI within the enterprise, the report states, “IT leaders struggle to implement AI within AI applications, wasting time and money on AI projects that are never put in production.”

Organizations want simplicity and flexible tools for more tasks and use cases, from research to production. At KX, we help to “squish your stack” and get more data science applications into production sooner. Here’s how:

AI Simulation

Decades into digital transformation, there is little “low-hanging fruit” left. Efficiencies are harder to find, anomalies are harder to spot, and decisions involve more people, from subject matter experts to IT teams, CTOs, and FinOps teams.

Simulation and synthetic data have always been important. From scenario stress testing to digital-twin modelling, results are inferred from simulations over swathes of historical and synthetically generated data – KX does this 100x faster at a 10th of the cost of other infrastructures. Common simulation case studies include:

- Monte Carlo simulations for pricing and risk analysis

- Wind Tunnel analytics in FI

- Stress testing across more scenarios, particularly under high-volume, outlier, and burst conditions
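
To make the first item concrete, here is a minimal Monte Carlo pricing sketch in plain Python. It is an illustration only, not KX code: it assumes a European call option on an asset following geometric Brownian motion, and the function names and parameter values are hypothetical. The closed-form Black-Scholes price is included purely as a sanity check on the simulated estimate.

```python
import math
import random


def monte_carlo_call_price(spot, strike, rate, vol, maturity,
                           n_paths=50_000, seed=42):
    """Estimate a European call price by simulating terminal asset prices.

    Assumes geometric Brownian motion (an illustrative modelling choice).
    Returns the discounted mean payoff and its standard error.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    drift = (rate - 0.5 * vol ** 2) * maturity
    diffusion = vol * math.sqrt(maturity)
    discount = math.exp(-rate * maturity)

    total = 0.0
    total_sq = 0.0
    for _ in range(n_paths):
        z = rng.gauss(0.0, 1.0)
        terminal = spot * math.exp(drift + diffusion * z)
        payoff = max(terminal - strike, 0.0)
        total += payoff
        total_sq += payoff * payoff

    mean = total / n_paths
    variance = (total_sq - n_paths * mean * mean) / (n_paths - 1)
    std_error = discount * math.sqrt(variance / n_paths)
    return discount * mean, std_error


def black_scholes_call(spot, strike, rate, vol, maturity):
    """Closed-form Black-Scholes call price, used here only as a reference."""
    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t

    def normal_cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    return spot * normal_cdf(d1) - strike * math.exp(-rate * maturity) * normal_cdf(d2)


# Hypothetical inputs: at-the-money option, 5% rate, 20% volatility, 1 year.
price, std_error = monte_carlo_call_price(100.0, 100.0, 0.05, 0.2, 1.0)
reference = black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0)
print(f"simulated: {price:.3f} +/- {std_error:.3f}, closed form: {reference:.3f}")
```

The standard error shrinks with the square root of the number of paths, which is why simulation workloads like this reward faster infrastructure: halving the error takes four times as many paths.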

Data Science

Your investment in AI infrastructure is wasted if data scientists cannot explore data and models easily and flexibly. With KX, tooling is driven from SQL and Python, the data and modelling languages of choice.

- Researchers, developers, and analysts use their existing skills and code bases, from model research to reporting, and from cloud data pipelines to real-time model deployments

- PyKX brings the 100x power of kdb to Python developers and notebooks

- With pro-code interfaces, users can collaborate and augment functionality from different programming languages, empowered by the unsurpassed performance of kdb

AI Engineering

As AI goes mainstream, enterprise features and guardrails are needed to ensure stability, robustness, and security. For governance, cataloguing and security, kdb integrates with tools of choice to share data and AI insights throughout the enterprise safely, securely and with speed.

- Industrialize application development by supporting the reuse of existing libraries and skills

- Adopt open standards and cloud protocols to simplify integration of governance protocols, facilitate access and authentication, and ensure security, safety, resilience, and high availability

- Abstract infrastructure concerns so that developers can focus on getting projects to production sooner, providing golden insights and ensuring business value

Compute Infrastructure

AI consumes very large volumes of data. That, unfortunately, means huge storage and compute requirements. With KX, storage and compute efficiencies mean cost savings and lower environmental overheads.

- KX operates directly on data, eliminating the need for latency-inducing transfer and costly data duplication

- Its columnar design provides optimized storage and better compression levels

- With a low KX footprint, CPU use is optimized, meaning less need for power-hungry GPUs
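
The link between columnar layout and compression can be sketched with a toy example. The code below is not how kdb stores or compresses data (the article does not describe those internals). It uses simple run-length encoding as a stand-in and a handful of made-up trade records to show why grouping each field's values together exposes more repetition than interleaving them row by row.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


# Hypothetical trade records: (symbol, exchange, size).
rows = [("AAPL", "NASDAQ", 100)] * 4 + [("MSFT", "NASDAQ", 200)] * 4

# Row-oriented layout: the fields of each record are interleaved.
row_stream = [field for row in rows for field in row]

# Column-oriented layout: all values of one field sit next to each other.
columns = [list(column) for column in zip(*rows)]

row_runs = run_length_encode(row_stream)
column_runs = [run_length_encode(column) for column in columns]

print("runs in row layout:   ", len(row_runs))
print("runs in column layout:", sum(len(runs) for runs in column_runs))
```

In the row layout no two neighbouring values match, so every value becomes its own run. In the column layout the same 24 values collapse into a handful of runs, which is the property real columnar compressors exploit.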

Generative AI

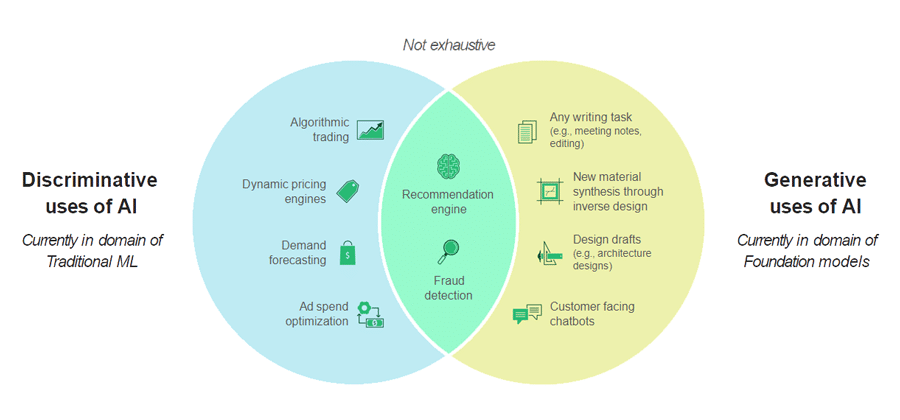

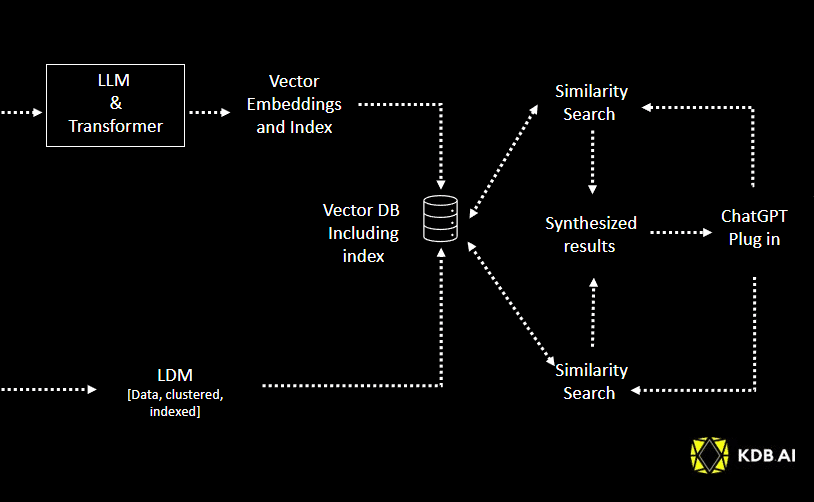

With AI no longer simply discriminative or code-queried, but also generative and prompt-queried, KDB.AI has been built to handle the standard requirements of GenAI workflows with greater efficiency, and to augment them with direct, raw vector processing power. Sample use cases range from more accurate fraud detection and insightful risk management to automated financial analysis and improved regulatory compliance, all harnessing real-time information, and particularly temporal data, for improved decision making.

- With proven vector-based representation and processing, KDB.AI provides more efficient storage and search of vector embeddings for all types of LLMs

- It also stores and computes over raw data for large data models, including, but not limited to, time-series data models

- Prompt integration for better prompt engineering and more flexible, intuitive querying
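
For readers new to vector search, the core idea behind the first bullet can be sketched in a few lines of plain Python. This is not the KDB.AI API: the document names, the three-dimensional toy embeddings, and the `top_k` helper are all hypothetical, and a production vector database would use approximate indexes rather than the brute-force scan shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vector), doc_id)
              for doc_id, vector in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings; real ones come from an embedding model and have
# hundreds or thousands of dimensions.
index = {
    "fraud_alert": [0.9, 0.1, 0.0],
    "risk_report": [0.7, 0.6, 0.1],
    "compliance_memo": [0.0, 0.2, 0.9],
}

query = [1.0, 0.2, 0.0]
nearest = top_k(query, index, k=2)
print(nearest)
```

In a GenAI workflow, the documents returned by a search like this are typically passed to an LLM as context for the user's prompt.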

Gartner, Applying AI — Techniques and Infrastructure, Chirag Dekate, Bern Elliot, 25 April 2023. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.