By Abin Saju

Web scraping is a method through which human readable content is extracted from a web page using an automated system. The system can be implemented using a bot/web crawler which traverses through domains or through a web browser which mimics human interaction with a page. There are many use cases for the scraped data, one of the biggest providers of scraped data is Google, which indexes and sometimes archives websites which are available for retrieval by a user. Other than search providers, e-commerce is another big sector that uses scraped data as a valuable tool in determining if the price of a product matches or beats the one offered by a competitor.

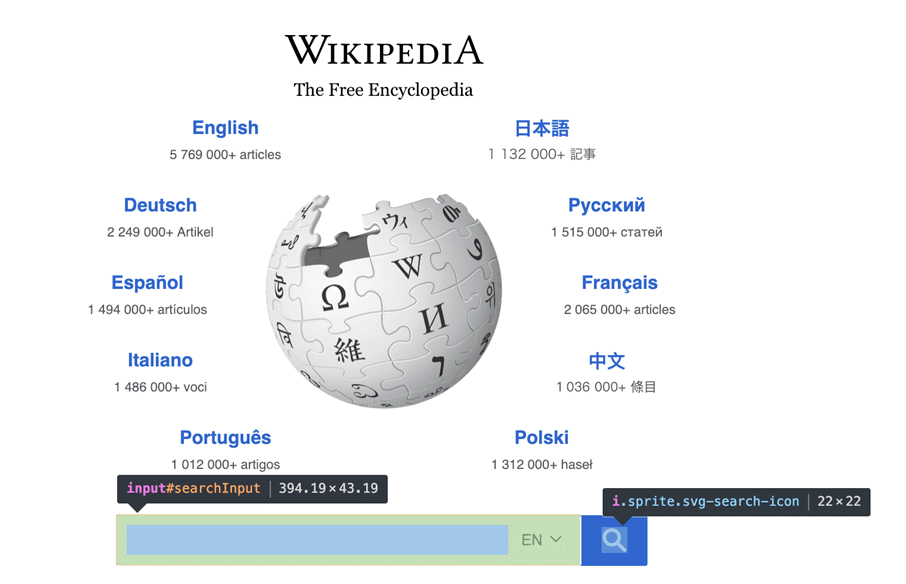

In my spare time I have implemented a rudimentary Python framework using Selenium which accesses a web page, interacts with it, and scrapes the data I am interested in. Selenium makes it quite easy to interact with a page, for example, looking at Wikipedia using Chrome’s inbuilt developer tools:

The developer tool highlights the id’s used by the webpage for the various widgets, these id’s can be used to interact with the page instead of the mouse. To do a simple search once my session is active, I simply do:

driver.get("https://wikipedia.org")

driver.find_element_by_name('search').send_keys("KX Systems")

driver.find_element_by_css_selector('.sprite.svg-search-icon').click()Using this technique interaction with virtually any website can be automated. For example, I could create a historical database that stores the prices of hotels. The system could alert me when prices are starting to rise or if there was a sudden drop for a particular listing. This can be done by scraping the html source code and converting it to a structured format using Selenium and Python’s lxml library. It would be trivial to configure the destination, room type, check-in and check-out date.

One way to store the scraped data is to use Pandas and save them in csv or Excel format. However, if in the future if I wanted to expand the program to look at 100’s of websites at much shorter intervals, I would need a much more efficient method of storing the data. So, I am going to use kdb+ along with embedPy. Using this interface means I don’t need to re write my existing code and allows me to quickly setup a database.

// Load embedPy

\l p.q

// Use European date format

\z 1

//Create folder if it doesn't exist

system "mkdir -p db";

// Empty folder if running the first time

\l db;

// If table to store data doesn't exist, create it.

if[not `HotelInfo in .Q.pt;

// Set down empty schema if table doesn't exist

.Q.dd[hsym `$string .z.d;`HotelInfo`] set .Q.en[`:.] flip `t`destination`hotel`checkin`checkout`price!"pssddj"$\:();

system "l ."

];

// Load definitions from the python script

pyscript:.p.import[`hotelscrape];

.pyscrape.start:pyscript`:startUp;

.pyscrape.getData:pyscript`:enterDestAndScrape;The above code sets up a database if it doesn’t already exist and loads the embedPy objects. The scraped data is passed back as a list which can then be converted to a table and upserted to disk. The use of embedPy reduces intermediate steps like saving down and reading in of csv’s.

The program can then be run recursively at set time periods. For this I use the internal .z.ts function:

\l scrape.q

.z.ts:{runProgram[hotelInfo]}

\t 14400000 / (4*60*60*1000) run every 4 hoursAnd voila! The program is now able to scrape data on multiple destinations at regular intervals and store it in a state-of-the-art db.

Further Reading:

A comparison of Python and q for data analysis: https://kx.com/blog/a-comparison-of-python-and-q-for-data-analysis/

embedPy GitHub repo: https://github.com/kxsystems/embedpy

How Google search works: https://www.google.com/intl/en_uk/search/howsearchworks/crawling-indexing/

More on how various elements can be located using Selenium: https://selenium-python.readthedocs.io/locating-elements.html